This is an Eval Central archive copy; find the original at medium.com/innovationnetwork.

How I applied emergent learning tools to connect evaluation, learning, and strategy

All too often, evaluation, learning, and strategy are disconnected. How many times have you been involved in an evaluation that meticulously collects data to answer questions that may no longer be relevant to the stakeholders involved? In 2019, I had the opportunity to participate in the Emergent Learning Certification Program sponsored by Fourth Quadrant Partners. At the start of this journey, I was guided by curiosity and viewed emergent learning as a chance to expand my toolbox as an evaluator, borrowing from different approaches and practices as needed. I entered the program with the following question in mind:

How can emergent learning be leveraged in my own evaluation practice to advance experimentation and ongoing learning across a diversity of stakeholders?

I was looking for a way to tighten the connection between evaluation, learning, and strategy. While I was enrolled in the program, I was able to apply the tools and concepts I was learning to an evaluation of a multi-site, foundation-funded initiative designed to advance health equity through resident engagement. This work was emergent. The foundation and its partners were learning along the way, and our thinking about how to define progress was also evolving as we continued to learn from the communities and their residents. In my mind, this was the perfect opportunity to apply the emergent learning framework and tools.

Here’s what I learned by applying emergent learning tools to my work:

Lesson 1: Applying line of sight thinking when developing or revisiting a theory of change can highlight areas of ambiguity and surface assumptions embedded in our thinking.

In emergent learning, we refer to line of sight as a way to maintain an unobstructed view from strategies to the ultimate outcomes we desire. By asking questions such as “What will this strategy make possible?” or “What will it take to get there (to the desired outcome)?”, we make our thinking explicit and surface underlying assumptions about what we think is needed to reach our ultimate outcomes.

Similarly, in evaluation, we think of a theory of change as a snapshot of our best thinking at a point in time. It’s a platform for making our thinking visible and for demonstrating how the strategies of an initiative or a program are connected to the changes we hope to see.

By developing a clear line of sight that illustrates how strategies are linked to desired outcomes, a group can stay focused on their collective vision for change as they continue to test and adapt their strategies over time.

In the health equity evaluation, applying line of sight thinking when revisiting the initiative’s theory of change gave us the space to surface our initial assumptions and reflect on how these assumptions were changing based on what we were seeing in the communities. For more information on strengthening line of sight, check out Fourth Quadrant Partners’ Strengthening Line of Sight.

Lesson 2: Creating a forward-facing learning question can advance a group’s learning over time.

In emergent learning, we often create a question to help frame and focus our learning. The answer to this learning question is intended to accelerate the group’s ability to move toward its desired goal. Learning questions are typically forward-facing (unlike evaluation questions, which tend to be retrospective in nature) and invite the group to think together about how to tackle a specific challenge or achieve a desired outcome.

For the health equity evaluation, our forward-facing learning question was: “What will it take to engage and empower residents experiencing health inequities to advance health equity in their communities?” Through the evaluation, we answered several retrospective evaluation questions designed to provide data about the various hypotheses being tested. The evaluation questions evolved over time as the initiative’s strategies evolved, but all data collected through the evaluation were meant to inform the forward-facing learning question. Constructing a forward-facing learning question that was relevant to the group encouraged us to stay focused on our desired outcome as we explored new ideas for moving forward. For more on crafting learning questions, check out Tanya Beer’s webinar on How to Ask Powerful Questions.

Lesson 3: Systematically applying Before and After Action Reviews can harness learning from one event to another, helping to ensure that the same mistakes don’t get repeated.

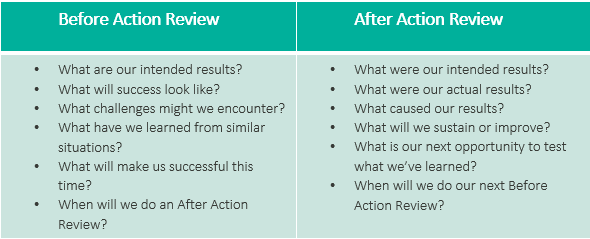

Before and After Action Reviews (BARs and AARs) offer a set of questions to help groups learn iteratively and improve results over time. For more information, check out Fourth Quadrant Partners’ Introduction to Before and After Action Reviews (BARs and AARs).

As part of this initiative, the funder convened community stakeholders at multiple points during the year to promote information exchange, peer learning, and networking. At the time of these convenings, I was still figuring out the value of these reviews; at first glance, the questions seemed almost too simple. When I reviewed my own AAR notes from the first convening, I noticed that some of the shortcomings of the second convening could have been prevented if we had systematically conducted these reviews. Many of the ideas raised after the first convening in response to the question “What will make us successful next time?” were not implemented. This demonstrated to me how easy it is for learnings to get lost from one event to the next.

Oftentimes our best ideas flow freely after a shortcoming or failure. However, over time those lessons tend to lose salience.

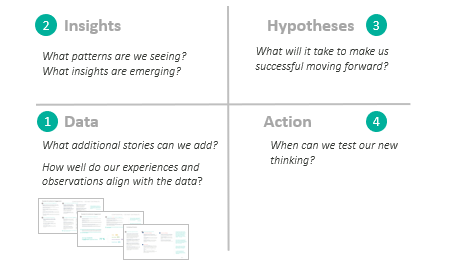

Lesson 4: Using the Emergent Learning Table as a platform for data interpretation helped move the group from data to insights to action.

The emergent learning table provides a platform for guiding a group of stakeholders through a process designed to 1) reflect on data, 2) generate insights grounded in data, 3) establish hypotheses based on those insights, and 4) move toward action. For the health equity evaluation, I used a combination of data placemats and an emergent learning table as part of the data interpretation process with foundation staff, community coaches, and members of their technical assistance team.

This combination created a space for stakeholders to digest the data, ask questions, share experiences, recognize patterns, and generate insights for moving forward. This process facilitated the connection between evaluation and learning.

The collaborative nature of the conversation and involvement of multiple stakeholders helped increase buy-in to new ideas that were generated.

For me, the learning took place when I moved away from the one-off applications of the tools across disparate projects and focused on one project to cultivate learning over time.

Over the course of the year I tested and applied various aspects of emergent learning. By integrating emergent learning tools and practices throughout the health equity evaluation, I started seeing the through-line across these applications. My breakthrough moment came when I facilitated a data interpretation meeting using the emergent learning table as a platform for discussion.

Moving Forward…

How can emergent learning be leveraged in evaluation to advance experimentation and ongoing learning?

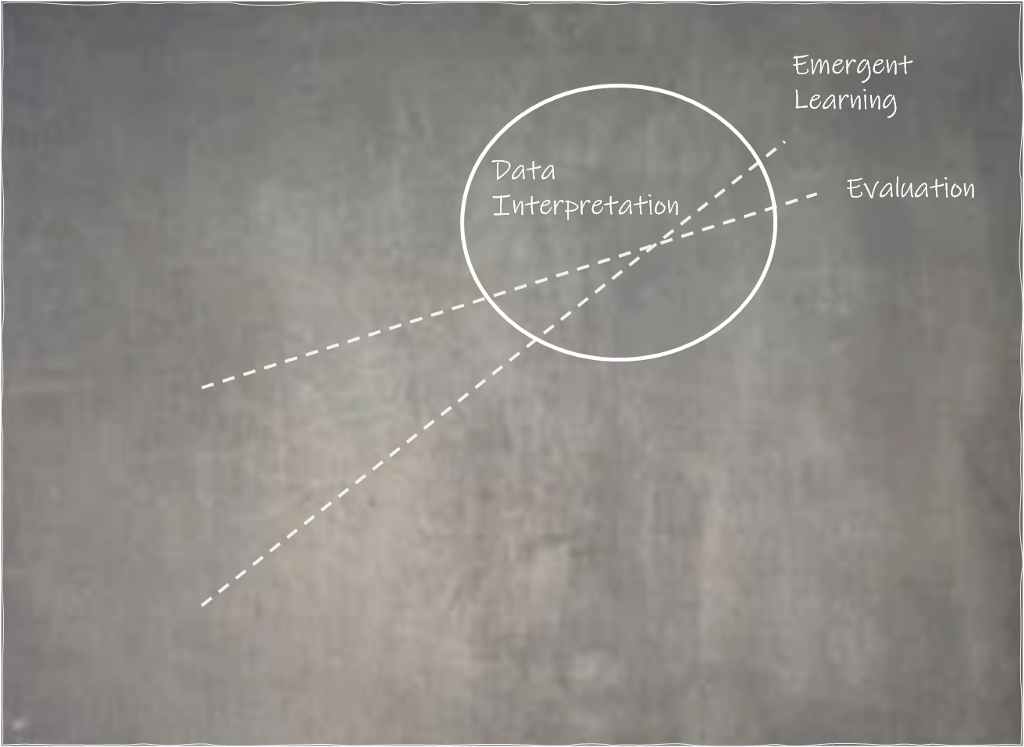

Take-Away #1: The true intersection between evaluation and emergent learning lies in the interpretation of data and its use for reflection and learning.

The emergent learning table demonstrated the power of inserting real-time, collaborative reflection into the evaluation sensemaking process. Guiding stakeholders through the sensemaking process and creating a space for digestion, reflection, and the generation of new ideas helps connect evaluation to learning and action.

Take-Away #2: A forward-facing learning question encourages the exploration and testing of new ideas, while keeping the ultimate goal in mind.

This helps ensure that the evaluation focuses on questions that matter and that inform the collective learning of the group. All too often in multi-year evaluations, the questions developed at the beginning of the evaluation become outdated and irrelevant over time. When this occurs, there’s a disconnect between the data being collected and the decisions that need to be made to inform strategy.

Take-Away #3: Building in opportunities for reflection and sensemaking throughout the evaluation creates a reflective practice that accelerates the learning potential of the group.

Opportunities for reflection help to create touchpoints for learning and adaptation of strategy.

Take-Away #4: Involving different stakeholders in the sensemaking process invites a diversity of perspectives that can strengthen insights and lead to new ways of moving forward.

Inviting a diverse group of stakeholders to the table and valuing their experiences and perspectives helps make evaluation less transactional and paves the way for transformation.

Emergent learning tools provide avenues to gather experiences, generate insights, and formulate new ideas in a way that encourages experimentation, learning, and adaptation. They also provide a platform that inspires collaboration and diversity of thought and perspective.

When coupled with emergent learning, evaluation has the potential to accelerate learning, magnify impact, and move us closer to our collective vision.

Reflections on the Intersection of Evaluation and Emergent Learning was originally published in InnovationNetwork on Medium.