This is an Eval Central archive copy; find the original at evalacademy.com.

This article is rated as:

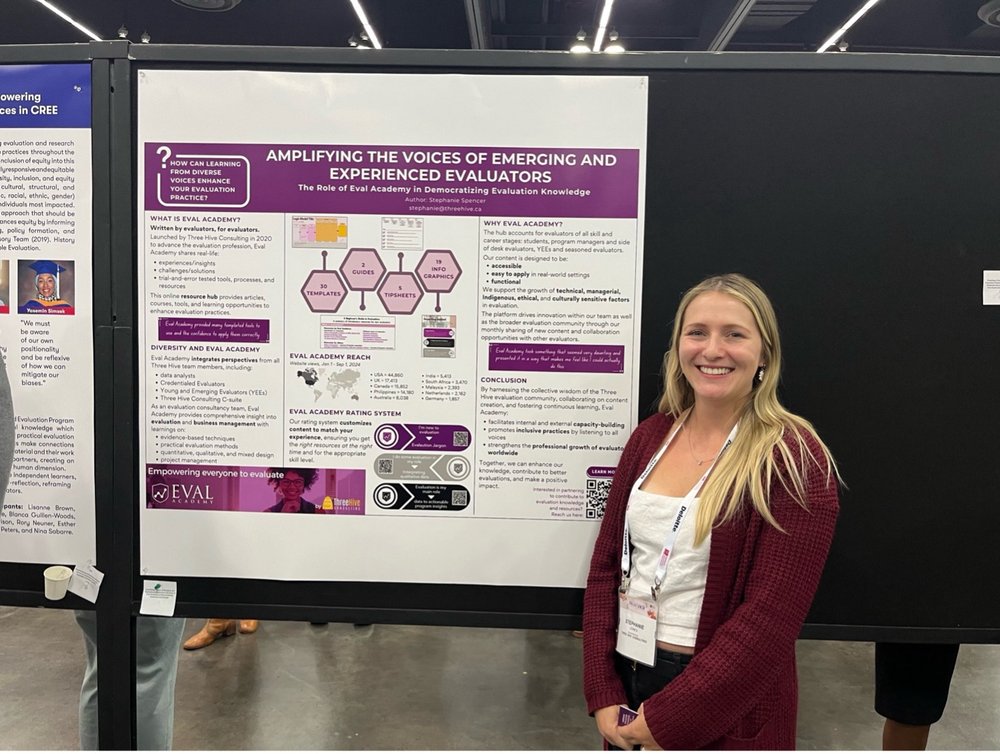

The 2024 American Evaluation Association (AEA) annual conference took place from October 21 to 26 in Portland, Oregon, marking my first experience at this event. This year’s theme, “Amplifying and Empowering Voices in Evaluation,” connected with me because of its focus on the important role that diverse perspectives play in shaping effective evaluations, from planning through to implementation and beyond. In this article, I’ll reflect on a few key takeaways that particularly resonated with my own evaluation practice, and hopefully with yours as well!

Understanding the Evaluation Landscape

One of the first activities I participated in at the conference was a deep dive into the evaluation ecosystem in North America, designed to help young or emerging evaluators make sense of the evaluation landscape. Tools like Eval Youth North America’s Kumu map and the periodic table of evaluation by Sara Vaca (2024) are valuable for both new and seasoned evaluators, helping us navigate the array of evaluation capacity-building resources and begin to make sense of the frameworks available. These tools serve not only as guides but also as reminders of the interconnectedness within our profession.

However, diversity within the evaluation sector also reveals challenges. The lack of standardized job titles and definitions—such as “evaluator,” “data analyst,” and “impact strategist”—can create confusion and hinder collaboration. This highlights a pressing need for clear communication and shared understanding among evaluation professionals. By developing a common language, we can foster better partnerships and streamline our practices.

How does this impact me as an evaluator? Reflecting on these discussions, I’ve realized how important it is to clearly explain my role as an evaluator—not just to new clients, but also to friends, family, and other professionals! By sharing what evaluation is all about and how it makes a difference, we can help demystify our work and raise awareness of its value.

Professionalism and Identity in Evaluation

Discussions around professionalism were prominent throughout the conference. The exploration of competency frameworks from the AEA and the Canadian Evaluation Society (CES) prompted important questions: Should evaluators aim to be generalists or specialists? Is advanced formal education, such as a PhD, necessary for success in this field?

While formal training can enhance skills and knowledge, I believe the core of effective evaluation lies in our ability to engage thoughtfully with diverse contexts and perspectives. This raises the issue of positionality: recognizing the biases and experiences that shape our work. By acknowledging our identities, we can enrich our evaluations and better serve the populations we assess. To learn more about biases, check out our Eval Academy article “Beyond Biases: Insights for Effective Evaluation Reporting”.

How does this impact me as an evaluator? I’m committing to being more open and transparent about my own biases in evaluations. Rather than viewing these biases as solely negative, we should also see them as strengths that can enrich our understanding of the context and results. By acknowledging positionality and learning to overcome these biases, we can better engage with the communities we work with and ensure their voices are heard. For example, this could involve seeking collaboration with others or revisiting methods to make them more participatory. At the same time, I recognize that there may be evaluators who are better positioned to navigate certain contexts or perspectives within projects. In my work as an evaluator on a consulting team, putting this into practice would include discussing these perspectives during the team development phase of an RFP. This approach will enhance the quality of my work while fostering a more inclusive evaluation process.

Engaging Program Participants: Centering Their Voices

A critical takeaway from the conference for me was the imperative to center the voices of program participants in our evaluations. Often, we become so focused on methodologies and frameworks that we lose sight of the individuals behind the data. Creative engagement strategies, such as using comic strips or storytelling to involve participants in making sense of the data, can make evaluations more relatable and impactful. Centering participants also means ensuring that they are fairly compensated for their time. You can read more about incentives for participation in evaluation in our Eval Academy article.

How does this impact me as an evaluator? In our complex world, it’s essential for evaluators to incorporate the voices of all partners, including program participants, from the start in order to generate meaningful insights and drive real change. As evaluators, we should do a better job of making space and time for this level of engagement, so that participant voices not only inform our evaluations but also shape the planning and process itself. For example, at Three Hive Consulting, we invite diverse perspectives to an evaluation committee that acts as a working group to help plan and support the evaluation. By prioritizing their perspectives, we can create more meaningful and resonant evaluations that truly reflect the experiences of those we serve.

Embracing Technology: Tools for Modern Evaluation

The integration of technology into our evaluation practices was a BIG theme at the conference. AI applications like ChatGPT for data synthesis and tools like DALL-E for creative visualization present exciting possibilities for enhancing our work. However, it is important to remember that technology should augment human connection, not replace it.

The challenge is to balance the efficiency that technology provides with the essential human touch necessary for meaningful analysis. We need to ensure that our evaluations capture the nuances of human experience, allowing us to truly make sense of the data. You can read more about the use of AI in evaluation in our article “Using AI to do an environmental scan”.

How does this impact me as an evaluator? I’ll continue to experiment with ways in which AI can support our evaluations while also creating space for reflection on this work. It’s important to share our experiences—what works, what doesn’t, and the lessons learned along the way—with our colleagues and others in the field. By fostering open conversations about the integration of technology, we can all contribute to a collective understanding of best practices and potential pitfalls.

Building Trust: The Foundation of Successful Evaluation

Over the course of the conference, the topic of trust emerged as a key element for amplifying and empowering voices in evaluation. There was a lot of discussion around strategies for cultivating trust with participants and clients through open communication, flexibility, and a commitment to learning. The “triangle of trust” framework, shared in a presentation by the Laudes Foundation and Convive Collective and anchored in authenticity, empathy, and sound logic, provided a useful lens through which to view our interactions as evaluators.

As evaluators, we often find ourselves in contexts where trust in both the evaluation process and the program itself may be fragile. Acknowledging this reality and engaging in open dialogue with clients is essential. Before delving into the specifics of evaluation, we must take the time to understand our clients as individuals and maintain that relational focus throughout the evaluation process. Slowing down and recognizing our shared humanity can also enhance our interactions. By fostering relationships that encourage vulnerability, we can create a more collaborative atmosphere, leading to richer, more insightful evaluations. Ultimately, this commitment to relationship-building not only strengthens our work but also serves the broader purpose of elevating the evaluation profession as a whole.

How does this impact me as an evaluator? Building strong relationships with clients and participants is essential. We can achieve this through simple actions, such as asking about their weekends before diving into planning or data collection, inquiring about what truly matters to them in the evaluation, and discussing how they define success. Additionally, completing capacity building before launching an evaluation and seeking feedback throughout the evaluation process, not just at the end, helps ensure our work aligns with their expectations.

Conclusion: Looking Ahead

Reflecting on my time at the AEA conference, I feel energized by the diverse community of evaluators dedicated to improving our practices. Looking ahead, I’m excited to prioritize collaboration, creativity, and inclusivity in my work. By centering the voices of program participants and combining our skills with rapid technological advancements in AI, we can continue to ensure our evaluations not only measure impact but also inspire positive action.