This is an Eval Central archive copy; find the original at evalacademy.com.

Much like you could argue that research and evaluation are related, perhaps members of the same family tree, I like to think of Implementation Science as a distant cousin of evaluation: one who comes for a really fantastic visit once in a while.

A couple of years ago, I had the opportunity to take a course that opened my eyes to the world of Implementation Science. The course introduced me to new approaches, frameworks, and tools that can be used in evaluation.

So, what is this world? Let’s start with a definition and some background. I think you’ll begin to see why Implementation Science is a great relative of evaluation.

What is Implementation Science?

Implementation Science is a field that examines the methods and strategies that enable evidence-based practice to be successfully implemented. It emerged only in the early 2000s, in response to the all-too-common gap between research on best practice and actual behaviour change.

Do we all know the story of handwashing? You can read a summary of it here, but briefly: Ignaz Semmelweis made the connection between handwashing and deaths on a maternity ward in 1847, but he was ineffective at communicating and spreading what he had learned (i.e., no behaviour change was implemented!). It wasn't until germ theory was established, between the 1860s and 1880s, that handwashing became more commonplace and mortality rates decreased. That's at least twenty years of unnecessary deaths. So, what was the problem? Implementation Science tries to answer that.

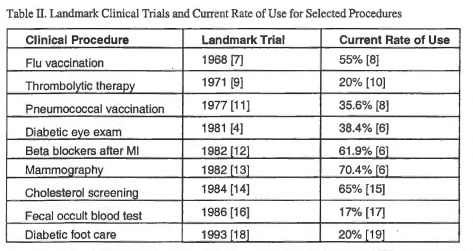

The handwashing story may sound like something that could only happen in the 19th century, but the problem persists today. Researchers and academics regularly establish the efficacy of treatments and interventions that never spread into common practice. It's now commonly cited that it takes 17 to 20 years for clinical innovations to become practice. This paper, written in 2000, shows how long it took for some effective therapies to reach even minimal rates of use.

Implementation Science was developed as a means to address this research/practice gap.

So, when a new, evidence-based program or intervention is designed and is ready to be operationalized, Implementation Science directs you to focus on how best to do that:

- Which stakeholders should you engage?
- What barriers or obstacles can you anticipate and mitigate?
- What enablers can you put in place?
- How can you be sure the program is implemented with fidelity?
- How can you implement in a way that promotes sustainability or uncovers lessons for spread and scale?

Implementation Science aims to bridge the gap from what you know to what you do and offers frameworks and structure to do this.

Now, although Implementation Science is a relatively young field, there's already a lot to dig through. There are whole courses on it (like the one I took), and it even has its own journal. After just a little more context, I'll focus on how it relates to evaluation and why you might use it.

A Snapshot of the Implementation Science Toolbox

Implementation Science has an overwhelmingly large toolbox. There are many, many frameworks, models, and tools that can be applied in various contexts. I'll summarize just a few that are likely the most relevant to evaluation, and I'm sharing some links that will lead you to more details if you want to dive in. After a brief description of these models, I'll follow with some real-world examples.

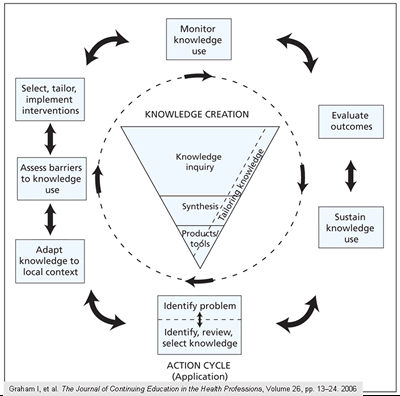

1. Knowledge to Action:

A process model used to describe and/or guide the process of translating research into practice. It has been adopted by the Canadian Institutes of Health Research (CIHR) as a core component of its approach to knowledge translation.

2. Determinant Frameworks:

Describe general types of determinants that are hypothesized to influence implementation outcomes (e.g., fidelity, skillset, reinforcement).

- PARIHS (Promoting Action on Research Implementation in Health Services) uses the Organizational Readiness to Change Assessment (ORCA) to explore its identified determinants.
- The Theoretical Domains Framework (TDF) integrates several theories into 14 core domains.
- CFIR (Consolidated Framework for Implementation Research) is a practical guide for assessing barriers and enablers during implementation. CFIR has a dedicated website that includes guidance for using it in evaluation, along with a question bank.

3. Classic Theories:

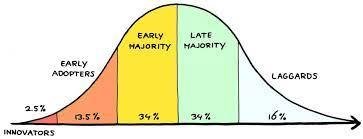

Rogers' Diffusion of Innovations was one of the first theories to suggest that implementation, or the diffusion of behaviour change, is a social process.

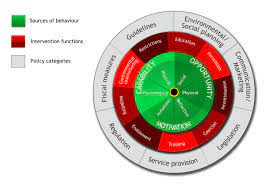

COM-B (Capability, Opportunity, Motivation, Behaviour) uses the Behaviour Change Wheel to support the design of interventions.

NPT (Normalization Process Theory) aims to assess how behaviour change becomes embedded in everyday routine. It includes a 16-item assessment scale centred on four core constructs.

Obviously, that was a pretty high-level run-through of just a few models, but the links will give you more detail should you wish to learn more about any of them.

Where does evaluation fit into all of this?

When you read the questions posed above about how to implement a new intervention, did they make you think of anything in evaluation? They did for me: FORMATIVE EVALUATION! When it comes time to ask your formative evaluation questions, Implementation Science can be a great guide.

When you conduct formative evaluation, it can be difficult to go beyond "what's working" and "what's not working" and be sure you are asking about the right factors (or determinants!) that may affect successful program implementation. Much like RE-AIM provides structured guidance to ensure you pay attention to five core dimensions of public health interventions (Reach, Effectiveness, Adoption, Implementation, and Maintenance), these Implementation Science models, frameworks, and tools offer us tips and tricks about potentially overlooked factors that contribute to program success or failure: things that we can be evaluating.

Let's use CFIR as an example because it's my favourite. I have often navigated to its question bank and asked myself, "Do my evaluation plan and data collection tools collect enough information to be informative about…"

- the intervention itself?
  - How might the strength and quality of the evidence for the intervention affect the outcomes I am evaluating?
- the external context (or outer setting)?
  - How might policies or incentives affect fidelity to the intervention?
- the inner setting?
  - How might team culture or readiness for change affect the speed at which we expect to see outcomes met?

In a recent project, the CFIR guide helped me think about the importance of having a champion for the initiative. Without a champion who is passionate about the work and promotes its importance, this side-of-desk, in-kind-funded program could easily lose momentum. So, in a pulse survey I had created for the operational team, I added a question asking whether team members felt they could identify a project champion. I've used the answers as indicators when discussing with the team whether the project is on track and adequately resourced.

Normalization Process Theory (NPT) is another one I've used. When an apparently great intervention isn't getting any traction, or is failing to spread or scale, why is that? It wouldn't be out of scope to ask an evaluator to help answer that question. NPT offers guidance about where to look. Can you assess or measure:

- Coherence: does the team understand the intervention?
- Cognitive Participation: is there sufficient direction and messaging to support this intervention?
- Collective Action: is the team empowered to act? Do they have the right tools and resources?
- Reflexive Monitoring: what are the team's reactions to doing things differently?

On a project evaluating the implementation of clinical pathways in primary care, NPT helped guide our evaluation of physicians' mental models. That is, how do physicians normally implement pathways: what enables their use, and what poses a barrier? The four core constructs of NPT helped ensure we were evaluating actual behaviour change.

The distinction between formative (or process) evaluation and Implementation Science is blurry, for me anyway, and I think there's some evidence that I'm not alone in this thinking. Implementation Science even claims RE-AIM as an implementation model, as seen here, here, and here. But formative evaluation and Implementation Science are different. Formative or process evaluation aims to determine whether an initiative is on track to meet its outcomes, whereas Implementation Science doesn't look at the overall effectiveness of the intervention, only at the effectiveness of the implementation strategy. Implementation Science assumes the intervention is already evidence-based, proven best practice, whereas evaluation might be looking to build that evidence base.

I think being knowledgeable about Implementation Science can only make our evaluation work stronger. I don't think we need to implement an entire framework with academic-level rigour for it to be useful. I like the "borrow and steal" approach: Implementation Science feels like insider information, pointing me toward proven determinants of program success that our traditional evaluation frameworks sometimes overlook.

Do you have other fields that you like to borrow and steal from? Let me know what they are!