This is an Eval Central archive copy; find the original at evalacademy.com.

Recently, we at Three Hive Consulting used outcome harvesting as part of a developmental evaluation with an organization that builds connections and helps facilitate community change. As with most developmental and participatory techniques, the method was fairly time-intensive, but the results were worth it. Along the way, we realized that although there is research on this methodology and there are examples of how to use it, we wished we could find a candid account of the ups and downs of implementing it in a real-world setting. Here we share how we used the methodology and what we wish someone had told us before we started.

What is outcome harvesting?

*Barbara Klugman, Claudia Fontes, David Wilson-Sánchez, Fe Briones Garcia, Gabriela Sánchez, Goele Scheers, Heather Britt, Jennifer Vincent, Julie Lafreniere, Juliette Majot, Marcie Mersky, Martha Nuñez, Mary Jane Real, Natalia Ortiz, and Wolfgang Richert

Source: https://www.betterevaluation.org/en/plan/approach/outcome_harvesting

To begin, let’s quickly review what outcome harvesting is. Outcome harvesting is a participatory evaluation methodology that was developed by Richard Wilson-Grau and colleagues*. In this methodology, change is monitored by collecting evidence of what has happened (gathering outcomes) and then looking back to understand how a program or intervention has contributed to these changes.

Outcome harvesting helps evaluators understand what has happened as a result of past actions. It is particularly useful for programs or interventions that target community- or population-level change, and in complex situations where the change seen in beneficiaries cannot be tied directly to a single action, program, or actor. It is also useful when a program's goals are broad and flexible, which makes it a helpful tool in developmental evaluations, where activities and intended outcomes may shift over the course of the evaluation. The findings of an outcome harvest show how a program or initiative contributes to change, and they can also serve as a planning tool to course-correct or modify program approaches.

Who is involved?

A successful outcome harvest is a participatory process: involving both those who have experienced change and those who will use the findings strengthens the harvest. Three groups of people need to be involved.

- Informants: The people who were part of, or who witnessed, the outcomes.

  In our case, these were the partners that the community initiative worked with.

- Harvest user: The person using the findings to make a decision or take action. They help shape the approach so that the data they need are collected. Sometimes there are multiple harvest users (e.g., funding organizations and the funding recipients who provide programming).

  In our case, the organization and the evaluation sub-committee were the harvest users.

- Harvester: The person(s) leading the outcome harvest. They support the process and suggest strategies to improve the credibility and reliability of the data.

  That's us! Our role was to consider how to make the data collection tools, analysis, and reporting processes as credible and rigorous as possible.

How did we do it?

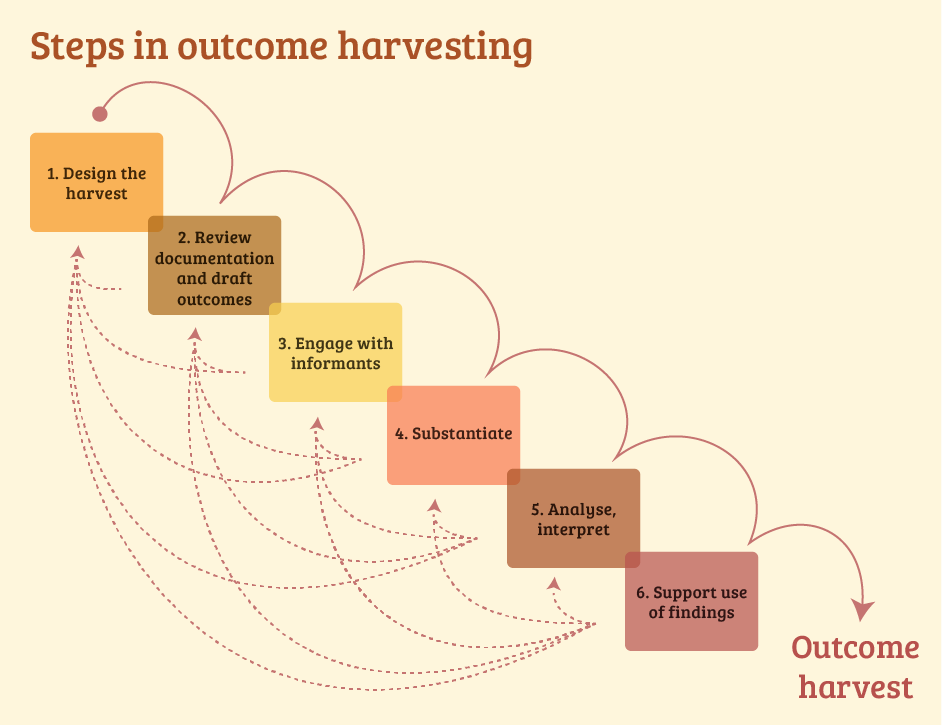

Steps in outcome harvesting: 1) Design the harvest, 2) Review documentation and draft outcomes, 3) Engage with informants, 4) Substantiate, 5) Analyse, interpret, 6) Support use of findings.

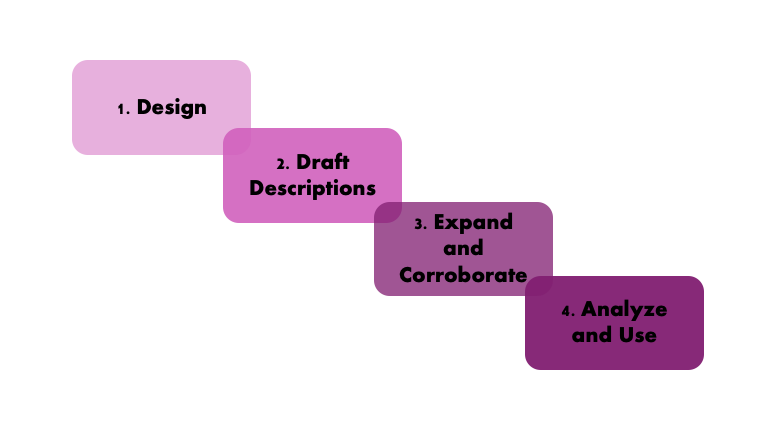

While BetterEvaluation describes six main steps for outcome harvesting, in practice our approach had four simple steps.

Three Hive’s outcome harvesting steps: 1) Design, 2) Draft descriptions, 3) Expand and corroborate, 4) Analyze and use.

1. Design

In the design phase, the focus is on clarifying what the harvest user needs to know and how they want to use the information. This corresponds closely to step 1 of the six-step approach.

We asked: What activities, events, or programs has the organization contributed to? How has the organization contributed? What is the impact of these activities, events, or programs? Who did they impact?

2. Draft outcome descriptions

Typically, the harvester (evaluator) drafts outcome descriptions through document review. However, the organization we were working with was not a direct service provider, and for many outcomes there was little documentation to review. So we started with the organization rather than with the informants. We reviewed the documents that were available, but we also invited the organization to list the activities, initiatives, and outcomes they had helped to contribute to. The organization also provided us with contact information for each of the partners (informants) who were involved.

We ended up with nearly 30 outcomes and almost 20 partners.

3. Expand and corroborate

Because we started by having the organization draft the outcomes, the next step in our approach was to connect with the partners listed in step 2 to hear what they thought about the outcomes the organization had generated.

In a short interview, we asked the partners:

- “What have you worked on with the organization?”

- “The organization identified that you worked on X together. Can you tell me a bit more about that? How did the organization contribute?”

- “What was the significance of that event/activity/program/collaboration?”

- “What impact do you think it had on you/the community?”

If a partner’s account differed from the outcome description, we probed further and sought additional sources of information. Some partners also suggested other partners involved in the outcomes, whom we then contacted.

4. Analyze and use

Finally, the data analysis and the conversations that followed were part of using the findings. We classified the outcomes according to the organization’s priority areas for change. In our case, collecting information about the outcomes was itself a way to engage the partners and have them reflect on what they had accomplished with the organization. Discussing the outcomes also helped the organization recognize that it needed to do further work to understand how its activities were linked to outcomes (i.e., logic modelling).

We also recognized that the outcome harvest had not captured community members’ perspectives on the outcomes. We used the Most Significant Change technique to gather participants’ stories about the activities and events, and then compared the outcomes and findings from the two techniques.

What did we learn?

Through this outcome harvest, we learned several important lessons about using this method effectively.

- Clear guiding questions set you up for success. As the harvester, communicate the strengths and limitations of this method to your harvest user to help them decide which questions to ask.

- Use multiple sources of information to collect and substantiate your outcomes. In our case, multiple partners often contributed to a single outcome. Asking all of them about their experience, rather than just one, provided a range of perspectives.

- Get creative with how you collect and verify outcomes. Most examples we found of substantiating outcomes used surveys, which seemed too impersonal and quantitative for the depth of understanding we were hoping for. Instead, we set up short interviews with informants.

- Get both the organization’s and the informants’ points of view on the outcomes. Understanding both perspectives enriched our data. We also uncovered some unintended consequences and negative feedback, which helped make the results actionable.

- This method takes time. Although each phone call with an informant took only 20 minutes, it took six weeks to reach all of them. Drafting outcome statements with the organization took an additional 2 to 3 weeks.

- Generally, people like talking about the work they have done. As evaluators (or harvesters), we found this a rewarding experience, and it helped us better understand the organization and how it works.

- The outcomes are limited to those that the organization and informants identify. A more diverse pool of informants gives a wider perspective on the outcomes, so ask your informants whether there is anyone else you should talk with.

- Sometimes the process matters more than the final reveal. The act of harvesting outcomes, and the conversations it prompts with informants and users, can inspire more action than the final report.

Hopefully this article has given you another perspective on outcome harvesting. It can be a powerful methodology to understand complex situations. Don’t be afraid to make the modifications necessary to best suit your harvest users and informants.
