This is an Eval Central archive copy; find the original at freshspectrum.com.

So I decided to create what I hope will eventually become an ultimate list of evaluation blogs. Not just a boring bullet point list, but one that gives you a sense of the human being on the other side of the internet.

This post is designed to grow. Meaning I plan to come back and update it. There are already a bunch of evaluation blogs I know well that are not on this page yet. But give it time and they will be added.

This post is in three sections.

- Section I includes recommendations from the FreshSpectrum Panel of Experts

- Section II includes all of the bloggers currently part of the main Eval Central blog feed. I’ve pulled a quote from a post for each blogger, and subsequently cartoon illustrated the quote.

- Section III will eventually include other evaluation blogs.

If all goes as planned, eventually this particular blog post will get very, very large.

Section I: Blog Recommendations from the FreshSpectrum Panel of Experts

Alright, so these recommendations come from my awesome panel of experts (which is still open for you to join).

Sue Sing Lim

One of my favorite blogs is from “We All Count”, a Canadian evaluation firm that provides useful and practical tips on how to implement equitable evaluation. The founder, Heather Krause, is very generous in sharing her experiences of the successes and challenges she encountered when implementing it.

At We All Count, we agree that Big Data is a valuable resource but we think there are some very important concerns that Big Data alone won’t fix. We think that what’s really exciting about Big Data is the ability to combine the efficiency and power of large datasets with the intentionality of small, curated data samples.

Her blog posts stand out because I found her voice easy to understand and also practical. I don’t feel it is overwhelming or too abstract. I feel like I can take away or do something after reading them and this gives me a sense of empowerment.

Highly recommend!

Sue Sing Lim joined the Kansas State Research and Extension (KSRE) SNAP-Ed program in 2016 in the role of program evaluator. She is responsible for designing evaluation plans, overseeing data collection, creating evaluation training and workshops, analyzing data, and creating reports to disseminate the results of program impacts.

Jon Prettyman

Many thanks to Chris for the opportunity to share (and the chance to add lots of new content to my Feedly subscriptions)! I recently found Marcus Jenal’s blog, where he comments on complexity and its application in social change processes. It’s worth a look if you’re interested in applying complexity concepts to your evaluation practice.

I know that in complex systems things are never that neat and never linear causal – there is not one thing in one box that leads to another thing in another box or to an observed behaviour. Reality is messier. I also missed the dynamics in these diagrams – how are these structures created, how do they persist, how do they change?

Jon Prettyman joined the monitoring and evaluation team at Climate-KIC in 2019. He designs and manages evaluation strategies for the portfolio of systems innovation projects. Before joining Climate-KIC, he worked with Mercy Corps on efforts to use emerging technologies for evaluation.

Christina Gorga

While not entirely related to evaluation, I’m really digging data-based design that’s created across different platforms. Judit Bekker out of Budapest has been producing some killer work with Figma, Tableau, and Adobe Illustrator that gives me lots of inspiration for my own work and how to push beyond default settings. She also gives insight on web safe fonts for Tableau as well as color considerations for passing contrast ratio tests.

Using fonts in Tableau can be a tricky thing because only Tableau web safe fonts will show up the same for everyone. A web safe font is a font that is considered to be a ‘safe bet’ to be installed on the vast majority of computers. Every computer that has a browser installed has default fonts built in so that it can display the text on the web.

Christina Gorga is a data visualization designer and strategist in Booz Allen’s Health account. She has experience designing reports and interactive dashboards for program evaluations, state healthcare agencies, HHS, CMS, and VA. She is also an active Tableau community member and loves training teams how to use it. You can reach out to her on LinkedIn or follow her on Twitter at @styleSTEAMed.

Marianne Brittijn

I follow Zenda Ofir, a South African evaluator based in Geneva (https://zendaofir.com/) who plays an active role in the South African Monitoring & Evaluation Association and blogs about current trends and debates in the sector. I discovered the quirky and wise developmental evaluator Carolyn Camman, based in Vancouver, through their Eval Café Podcast and only read their first blog post today (but have been following them on Twitter for a while).

Two – We have to do much more to display the full value of evaluation for a new era. As the COVID-19 pandemic races around the world, evaluation struggles for space. Research studies and data overwhelm, yet evaluation professionals and studies are not present at influential tables. We have fumbled in proving the value of evaluation for the challenges facing humankind. Let us do our best to show the value of evaluation once the immediate heat of the pandemic is over and we move into sense-making in a changed world.

Transforming Evaluations and COVID-19, Part 4. Accelerating change in practice

Evaluators interested in anything related to developmental evaluation and equity should pay attention to Carolyn (http://www.camman-evaluation.com/). I also love the ARTD blog (https://www.artd.com.au/read/our-blog/). They’ve been putting out highly practical content that speaks to how organisations can (and should) adapt their M&E during the COVID-19 crisis. The same is true for Feedback Labs (https://feedbacklabs.org/blog/).

Marianne Brittijn is a Monitoring & Evaluation (M&E) practitioner in the development and social justice sector. She conducts external evaluations (ideally participatory and developmental ones), develops organisational M&E systems and facilitates M&E training courses. In addition to her consultancy, she works as a part-time PMEL Officer for CORC and the South African Shack Dwellers International Alliance.

Section II: Eval Central Bloggers

All of the bloggers in this section can be followed via Eval Central.

In order to be part of the main Eval Central blog feed I require permission from the blogger. If you have a blog that you want included, you can submit it for consideration.

I also included myself, because it felt weird not to include myself. Although it also felt weird including myself. I ended up just highlighting a really old blog post (circa 2012).

Amanda Klein

It’s not always easy to measure the impact of family and community engagement efforts. Some aspects of education — like test scores or report card grades — (notwithstanding the wide variety of controversies around their use) are pretty straightforward to measure. They’re already quantified. They’re known entities. We can tell that story. But when we talk about measuring the impact of, say, a super successful family science night, our minds go blank.

Ann K Emery

We also wanted the information to be actionable (duh). We wanted to design a one-page meeting handout that was not only clear but would also give the leaders something to talk about together.

Ann W Price

That’s why I find logic models so darn helpful. They may be despised by some, but I believe they are despised because they are oftentimes overly complicated. (I certainly have been guilty of creating a few that were way too complicated myself). But I have experienced over and over again a situation in which the program staff and leaders just knew they could explain their program clearly. Until we went through a logic model process, and they couldn’t.

Betsy Block

Maybe because I’ve only ever seen hot air balloons from a distance, my memory of them leans towards vibrant orbs, sometimes illuminated, gracefully soaring in the air. I kept working through this image of the hot air balloon, and thinking about what goes into a successful flight. What came to mind was the construction of the balloon itself, how it is sewn, the importance of fabric; and that my personal mission is to be a weaver of a fabric for a stronger community.

Beth Snow

a lot of the value of clarifying a program theory comes from the process. Finding out that people aren’t on the same page as one another about what the program is doing and why, identifying gaps in your program’s logic, surfacing assumptions that people involved in the program have – all of this can lead to rich conversations and shared understanding of the program among those involved and you just don’t get that by handing someone a description of a program theory that was created by just one or two people.

Chris Lysy

The null hypothesis is immensely powerful. It doesn’t have to be proven, it just is.

You don’t have to explain why you’re using Word to write a report or PowerPoint to give a presentation. You don’t have to explain why you present at conferences or write for a journal. They are already accepted, they are the null.

Creative approaches are never the null.

Carlos Rodriguez-Ariza

This moment is also a challenge for those of us who work in evaluation functions in the field of international development, where we generally have the luxury of time, operate within clear theories of change, and do our best work when we can mix methods, use multiple data sources and conduct in-depth interviews with a variety of stakeholders.

[translated from Spanish]

Carolyn Camman

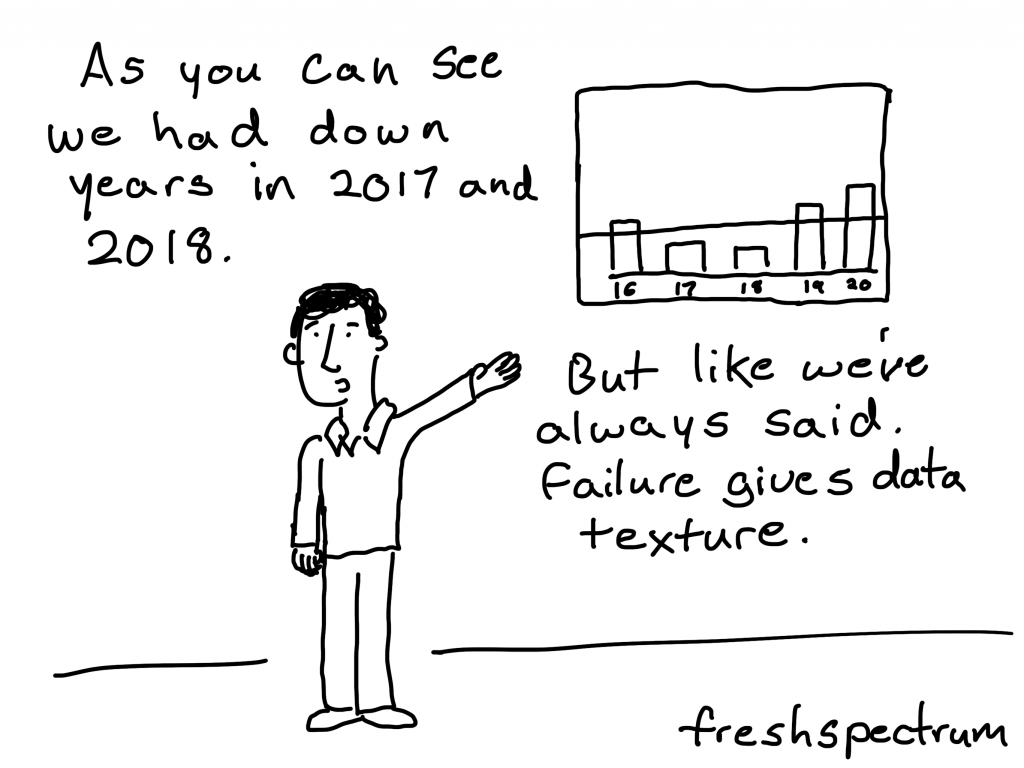

About a year ago I was chatting with someone who had been learning to work with wood. He said he found it powerfully healing because you do make mistakes and you can’t reverse them. The mistakes become part of it. You just keep going. I’ve also been taking improv classes and learning the same thing. Whatever happens, you work with it. Fix it by moving forward, not by trying to roll it back and erase it.

Cameron D. Norman

Health. Lastly, how well are we? When the effects of being inside, isolated, and perhaps exposed to a virus are real, present and pervasive, your audience might not be in the state where the depth and quality of thought are what we need to get the responses we want. Many of us are not our usual selves these days and our responses will reflect that.

Dana Wanzer

RoE is not conducted for the sake of conducting it, nor is an evidence base of research important unless it is useful and used by the intended audience—in this case, practicing evaluators.

Elizabeth Grim

For four years I’ve been pondering – Who am I as an evaluator? Am I even an evaluator? Or am I a social worker with an evaluation and data-driven mindset? Can I be both? Am I a policy analyst who implements data-driven approaches? Am I an advocate that uses data to drive change? How many hats can I wear before my professional identity is so dispersed that it is nonexistent? Does claiming a professional identity even matter?

Eval Academy

Recently I was asked by a client about an evaluation literacy course for its board. The client’s board members had just attended a strategic planning day and through that discussion felt they needed education on evaluation and metrics. On one hand I thought “bravo, they want to know more about evaluation!”; on the other hand I thought “shit… I’ve totally failed them as their evaluator – what have I been missing?”

Strategic Learning and Evaluation – What Boards Need to Know

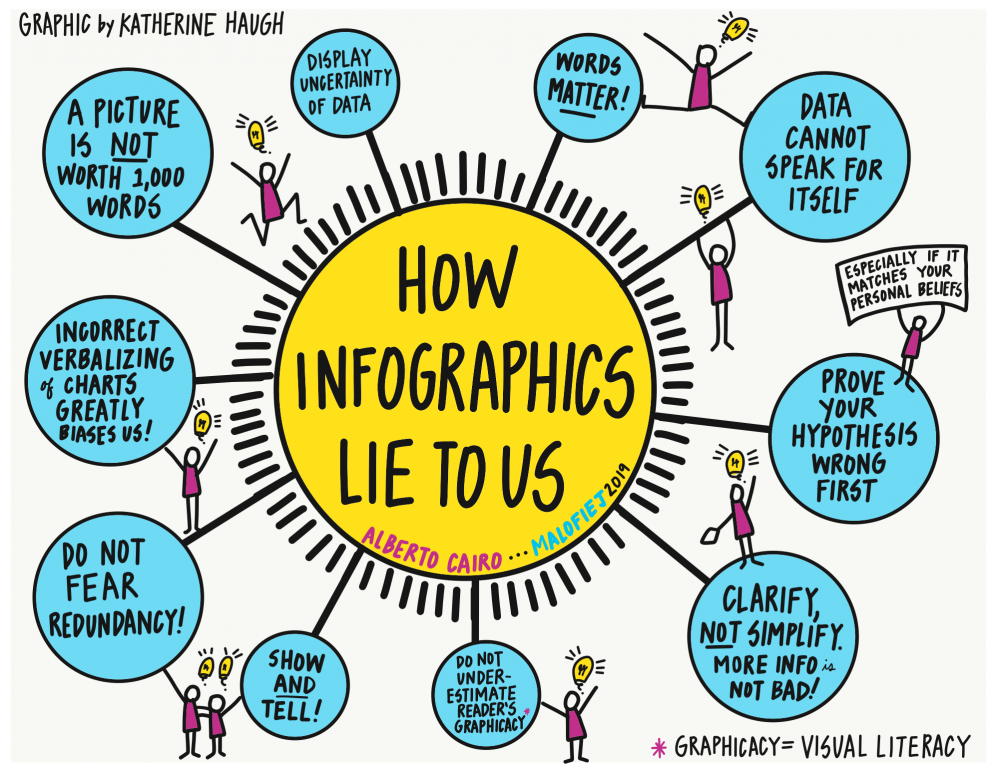

Katherine Haugh

Michelle Molina

Having a clear understanding of the purpose of the sort of data you are collecting will help you focus on the type of data you should be collecting and the sorts of conversations you should be having when you are making sense of that data.

Nicole Clark

Before transitioning, I knew what the end game was: to live life on my terms and help organizations raise their voices for women and girls of color. Once that transition happened, it became harder to stay motivated because everything I was doing was for someone else.

What I’m learning along the way is that it’s ok to question a dream. Which can be difficult when you’ve had a tunnel vision on that dream for so long. I’ve also learned that it’s ok to give yourself permission to try.

RKA

Numbers had their purpose (they are easily gathered and understood), but they have outlived their usefulness. The silver lining: with nary a visitor to count inside the building, is now not the perfect time to rethink and change how your museum measures success? How does a museum arrive at metrics that will stand the test of time?

Thomas Winderl

Instead of action language, use change language. Change language reports on the results of an action instead of the action itself.

An example of change language is:

“150,000 girls know how to protect themselves against HIV infection with the support of XYZ”

Section III: Other Evaluation Blogs

Alright, so I know that right now I am missing a bunch of evaluation world favorites. And I could bounce around from list to list finding and pulling them together for this post.

But I think blogs are better shared when they come with advocates. Either the bloggers themselves, or others in the evaluation community who love their work.

So if you want your favorites listed here, be their advocate and write a comment.

Like I said at the beginning of this post, this page is intended to grow.