This is an Eval Central archive copy; find the original at evalacademy.com.

Remember when you were a kid and you heard there was going to be a new kid at school? The news spread like wildfire. Students were excited and intrigued by this new person. When the new kid arrived, many were eager to get to know them and figure out whether the new kid could be their new friend. The more reticent kids might have hung back and instead heard rumours about who this new kid was and what they were like. Some of those rumours turned out to be true and some not so true.

Developmental Evaluation (DE) has been the evaluation world’s so-called new kid. Over the past ten years there has been excitement and intrigue around it. Some evaluators have explored DE as their new evaluation friend, while others remain unsure of and intimidated by it. Just as with the new kid at school, misconceptions remain about what DE is and how it is actually practiced.

I have practiced evaluation for over ten years and, throughout that time, have loosely thrown around the term “developmental” when describing an initiative. I have prescribed DE when it was not appropriate, and I have not used DE when it was appropriate (I know I am not alone in this; I have heard many of you have done it too!). DE has been overused and misconstrued so many times that we now hear the question, “but is it true developmental evaluation?”

I am now finally working on an evaluation that I believe to be true developmental evaluation (no really, I’m serious this time). The initiative and evaluation are in their early days. Yet I have already had a very different experience than in previous DE (and so-called DE) projects, and I have learned a number of lessons I’d like to share.

In this article, I outline six lessons from my DE experience, including why I think it is true DE. But before we jump into those, let’s quickly review what DE is.

What is Developmental Evaluation?

According to its originator, Michael Quinn Patton, DE “supports innovation development to guide adaptation to emergent and dynamic realities in complex environments.”¹ Unlike more traditional approaches, it is embedded rather than detached, continuous rather than episodic, and has learning, not judgment, as its goal.

My favourite way to show how DE differs from more traditional evaluation approaches is this extended version of Bob Stake’s soup metaphor:

“when the cook tastes the soup, that’s formative; when the guests taste the soup, that’s summative….DE begins before cooking, when the chef goes to the market to see what vegetables are the freshest, what fish has just arrived and meanders the market considering possibilities of who the guests will be, what they were served last time, what the weather is like and considers how adventurous and innovative to be with the meal.”

Lesson 1 – Just because it is called DE doesn’t make it so

In the past, I have worked on evaluations where stakeholders said they wanted DE; however, as the work progressed, it became clear that what they wanted wasn’t DE. For example, they stated that the purpose of the evaluation was learning, but then continued to focus on funder needs and accountability-type questions. In other cases, the initiative had already developed its model and was really just looking to improve it, not to develop it. I’ve also worked with clients who say they want “rapid feedback” but then ask for the findings to be rolled up into an interim and/or final report. Some clients insist on DE, but then want a traditional, static evaluation plan with retrospective evaluation questions. This is not DE.

My current DE experience was different from the start. It began with an RFP that identified the need for a developmental evaluator. While this is not unique, what was unique was how the RFP articulated details that aligned with DE. The RFP described the vision for the work and outlined a high-level idea of what was needed to get there, including the need for data to inform the development and implementation of the work. It also referenced related DE concepts like complexity and systems change. It did not ask us to relay our understanding of the initiative or to spell out a detailed evaluation plan. After we were shortlisted, our interview focused on DE concepts and our firm’s experiences with DE, particularly my own knowledge and skills as an evaluator. That conversation confirmed that, if we were selected, this would be true DE.

Lesson 2 – Drink Through a Fire Hose

The J.W. McConnell Family Foundation and the International Institute for Child Rights and Development have published A Practitioner’s Guide to Developmental Evaluation. In this guide, they state:

“your effectiveness as a developmental evaluator is determined, in part, by how well you understand the initiative and the broader context in which it is situated.”

My client and I both understand this to be true. As a result, our first meeting didn’t have an agenda. Its only purpose was an unstructured conversation about the history of the initiative, where it is now, what data has been collected, what questions they are struggling with, and where they think they might be headed. Through that discussion, we talked about various stakeholder groups, past conflicts that have arisen, and potential future conflicts. We recorded that session, and I took oodles of notes that I still refer back to. During that conversation, several documents were mentioned, and I combed through them afterward to try to understand them. It was like drinking through a fire hose. Slowly, though, after attending subsequent meetings and having multiple conversations, the stakeholders, language, questions and broader context are beginning to make more sense.

Lesson 3 – Make Friends

A Practitioner’s Guide to Developmental Evaluation also says that the quality of relationships determines the degree to which a developmental evaluator can access information and influence change.

As an external consultant working virtually with clients, I cannot emphasize enough the importance of making friends (aka building relationships). But making friends takes time. Remember that new kid at school? It took a while to get to know and trust one another.

To try to speed up that process, I set aside a good amount of time at the first meeting with our client’s entire team for getting to know one another. And, you guessed it, we used good old-fashioned icebreaker activities! In one of the icebreakers, I told them a story about myself that highlighted why I am an evaluator and what evaluation means to me. We also went around the group and had each person present an object, explaining why it was special to them and how it reminded them of evaluation.

I also gave a short presentation on DE to outline expectations for the evaluation and explain how it differs from traditional evaluations they might have been involved in previously. In that presentation, I focused on what DE might mean for them. Here’s a snapshot of some of those slides:

Take note of the “Include me” slide. I have found that because I am not on-site, people can forget to include me in important conversations and meetings. One solution is to have an insider: a key friend who keeps me in the loop on what is happening AND reminds others to include me.

Lesson 4 – Capture the gold nuggets

While drinking through the fire hose and making friends, you will likely hear a number of questions your clients are struggling with. You may hear phrases like:

- “how do we…?”
- “wouldn’t it be interesting to know…?”
- “I wonder if we…”
- “I don’t know how we’re going to…”
- “One of the things we need to figure out is…”

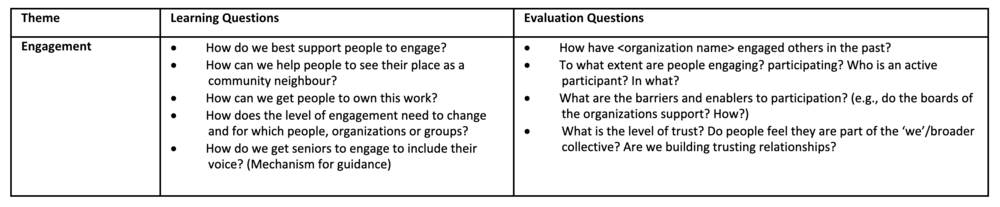

These are your gold nuggets. Pay attention to them and start capturing them. I often jot the questions down in my meeting notes, star them, and then transfer them to a question inventory. An abbreviated version is included below:

Sometimes you might need to dig for the nuggets. You can do that by posing questions like:

- What questions are you struggling with?
- What issue(s) need clarity?
- Where is the energy and focus?
- What activity are people most animated about?
- Where are the quick wins?

Lesson 5 – Try to organize the chaos

One of the key roles of a developmental evaluator is to help stakeholders find their way through complexity. One way I’ve tried to do that is to take the list of questions I captured and work with the group to prioritize the ones we need answered.

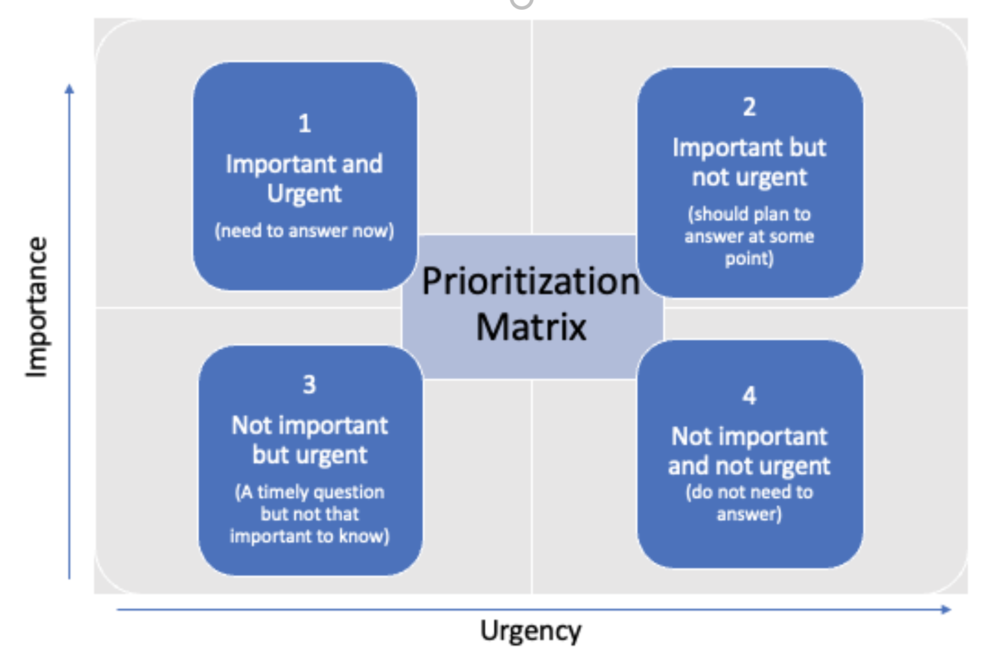

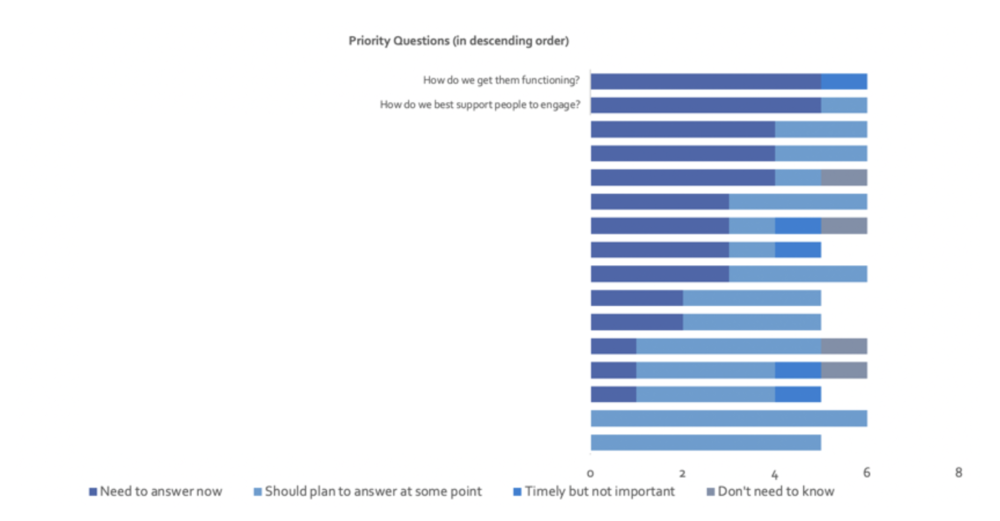

For this group, I asked which two questions we needed to answer in the next quarter. To identify them, I uploaded the list of learning questions to our survey platform, Qualtrics, and had the team rate each question for urgency and importance. We then analyzed the ratings, and two priority questions emerged. The figure below shows the matrix and the two priority questions (the remaining questions are blocked out of the graph).

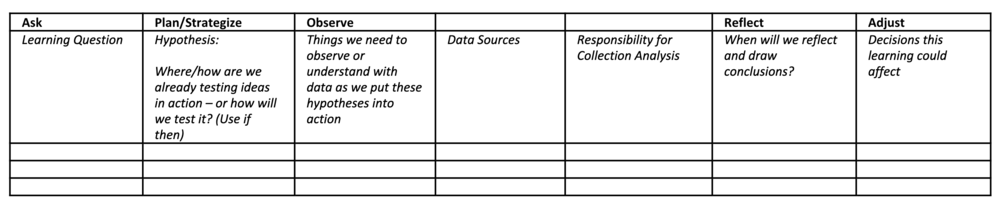
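The article doesn’t say exactly how the urgency and importance ratings were analyzed. As a minimal sketch of one plausible approach, the Python below averages each question’s ratings, places it in a 2x2 urgency/importance matrix, and picks the two highest-scoring questions from the “urgent and important” cell. The question labels, the ratings, the 1–5 scale and the midpoint cut-off are all hypothetical, not the client’s data.

```python
from statistics import mean

# Hypothetical ratings (illustrative only, not the article's data).
# Each tuple is one respondent's (urgency, importance) score on an
# assumed 1-5 scale; the article does not say which scale was used.
RATINGS = {
    "Question A": [(5, 4), (4, 5), (5, 5)],
    "Question B": [(2, 5), (3, 4), (2, 4)],
    "Question C": [(4, 4), (4, 3), (5, 4)],
    "Question D": [(4, 2), (5, 2), (4, 1)],
    "Question E": [(1, 2), (2, 1), (1, 1)],
}

MIDPOINT = 3  # assumed cut-off: mean scores above this count as "high"


def summarize(ratings):
    """Average each question's urgency and importance scores."""
    return {
        question: (mean([u for u, _ in scores]), mean([i for _, i in scores]))
        for question, scores in ratings.items()
    }


def quadrant(urgency, importance, midpoint=MIDPOINT):
    """Place one question in a cell of the 2x2 urgency/importance matrix."""
    high_u = urgency > midpoint
    high_i = importance > midpoint
    if high_u and high_i:
        return "urgent and important"
    if high_i:
        return "important, not urgent"
    if high_u:
        return "urgent, not important"
    return "neither"


def top_priorities(ratings, n=2):
    """Pick n questions: urgent-and-important first, then by combined mean score."""
    summary = summarize(ratings)
    ranked = sorted(
        summary.items(),
        key=lambda item: (
            quadrant(*item[1]) == "urgent and important",
            item[1][0] + item[1][1],
        ),
        reverse=True,
    )
    return [question for question, _ in ranked[:n]]


if __name__ == "__main__":
    for question, (u, i) in summarize(RATINGS).items():
        print(f"{question}: urgency={u:.2f}, importance={i:.2f} -> {quadrant(u, i)}")
    print("Priorities for the next quarter:", top_priorities(RATINGS))
```

Ranking by combined score inside the top-right cell is just one design choice; a group could equally look at the plotted matrix and choose by discussion. To “rinse and repeat”, the same functions can be rerun on an updated inventory that includes newly surfaced questions.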

Now that we have identified the two priority questions, we have slotted them into a learning framework (see template below) and are beginning to answer them. Once we answer those questions, the intent is to rinse and repeat (i.e. identify and prioritize the next questions to answer). The next questions might not even be on the original inventory. That’s the point of DE: to be responsive and adaptable as the initiative evolves and to uncover what you haven’t even considered before.

Lesson 6 – Watch what you spend

Budgeting for DE and monitoring the costs is important. Before starting work with our client, we agreed on a budget and some loosely defined deliverables it would cover. However, if you do a good job of making friends and organizing the chaos, you will find that your work quickly snowballs. Pretty soon you will be included in every meeting!

My approach is to keep a close watch on spending, update the client on the budget often, and discuss with them whether and how to adjust their evaluation priorities and expectations. Another option is to estimate the number of hours, or FTEs if you are an internal evaluator, when scoping the project.
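The article doesn’t prescribe a tracking method. As a minimal sketch, assuming work is billed hourly against a fixed budget over a fixed contract period, keeping a close watch can be as simple as comparing the share of the budget spent with the share of the contract elapsed, and flagging when spending runs ahead. The function name, the dollar figures, the hourly rate and the 10-point tolerance below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BudgetStatus:
    spent: float
    remaining: float
    hours_remaining: float
    pct_spent: float
    pct_elapsed: float
    over_pace: bool


def budget_status(total_budget, hours_logged, hourly_rate,
                  months_elapsed, months_total, tolerance=0.10):
    """Compare the share of budget spent with the share of the contract elapsed.

    Flags the project when spending runs ahead of the calendar by more
    than `tolerance` (an assumed 10 percentage points by default).
    """
    spent = hours_logged * hourly_rate
    remaining = total_budget - spent
    pct_spent = spent / total_budget
    pct_elapsed = months_elapsed / months_total
    return BudgetStatus(
        spent=spent,
        remaining=remaining,
        hours_remaining=remaining / hourly_rate,
        pct_spent=pct_spent,
        pct_elapsed=pct_elapsed,
        over_pace=pct_spent > pct_elapsed + tolerance,
    )


if __name__ == "__main__":
    # Hypothetical figures: a $60,000 budget, $150/hour, 12-month contract.
    status = budget_status(60_000, hours_logged=180, hourly_rate=150,
                           months_elapsed=3, months_total=12)
    print(f"Spent {status.pct_spent:.0%} of budget after "
          f"{status.pct_elapsed:.0%} of the contract")
    print(f"About {status.hours_remaining:.0f} hours left")
    if status.over_pace:
        print("Spending is ahead of schedule: revisit priorities with the client.")
```

The `hours_remaining` figure gives a concrete number to bring to the client when discussing whether to adjust evaluation priorities and expectations.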

Those are my six lessons so far. I am sure lessons seven through one hundred will emerge soon. Make sure to sign up for our newsletter so you don’t miss out!
