This is an Eval Central archive copy; find the original at evalacademy.com.

Evaluations are inherently political, which means they are fraught with ethical choices and decisions along the way. Many times throughout my career I have had that uneasy feeling: my gut talking to me and telling me to slow down and rethink what I am doing. I’ve learned that when I do slow down, a devil appears on my left shoulder and starts yelling:

“WHAT ARE YOU DOING? YOU DON’T HAVE TIME FOR THIS! YOU’RE GOING TO GO WAY OVER BUDGET DOING THIS – KEEP GOING! SO WHAT IF YOU DON’T HEAR FROM THOSE PEOPLE? SO WHAT IF THAT PUTS THEM AT RISK?”

And then the angel on my right shoulder appears. Thank goodness! We all know from the movies that I’m supposed to listen to her. I slow down even more and listen intently:

“Do the right thing,” she whispers.

Uh-huh, okay. I keep listening for more….

That’s it?! What in the hell (excuse my language, angel) does that mean? In my evaluation experience I have faced numerous situations where I know I should do “the right thing,” but more often than not, the decisions we face are not black and white, right or wrong.

For example:

….during evaluation planning, we don’t have the resources to answer all questions from all stakeholders, so how do we decide how to focus the evaluation? Whose needs will we address and whose will we leave out? Is it appropriate to focus evaluation questions on funder needs and burden program staff and participants with collecting and reporting information that is not important or useful to them? Is it enough for funders to check the box and say, “The program was a success – good for us,” if the evaluation offers little benefit to program staff and participants?

….during data collection, we may be inclined to take the easier path to get the data we need to answer our questions. We may ask ourselves, “do I really need to include that group?” Trying to access that group and get their informed consent could burn a lot of time and resources. Is it right to exclude them and only provide the perspective of others?

….during analysis and reporting, what do we do if our stakeholders suggest we present findings in an alternative (i.e. more favourable) way? This happens far more often than I would like, but stakeholders are called stakeholders for a reason: they have a stake in whatever it is you are evaluating. A few years ago, we evaluated a program whose stakeholders told us from the start that its livelihood depended on favourable evaluation results. When we uncovered and reported what they perceived as negative findings, they of course pushed back. As evaluators, we have a responsibility to present the data, both positive and negative; however, anyone who has worked as an evaluator knows that data isn’t just data (yes, even quantitative data). As Michael Quinn Patton states in his book Utilization-Focused Evaluation, “data always requires interpretation. Interpretation is only partly logical and deductive; it’s also value laden and perspective dependent.” The conversation is never really about whether the results get reported, but rather a back-and-forth about how, and to what extent, they are presented.

So, what is an evaluator to do?

Here are some things that have helped me silence the devil on my left shoulder and figure out what my angel means:

Program Evaluation Standards

Standards have been created to help guide evaluators. The Joint Committee on Standards for Educational Evaluation (JCSEE) developed the Program Evaluation Standards, which have been adopted by evaluation associations in the United States and Canada. The Standards provide guidance both to evaluators planning and implementing program evaluation projects and to evaluation users, helping them know what to expect from the evaluation process and products. Check out our free 6-page resource that provides evaluators with reflective questions for each of the Standards.

Alberta Innovates ARECCI

ARECCI Ethics Screening Process

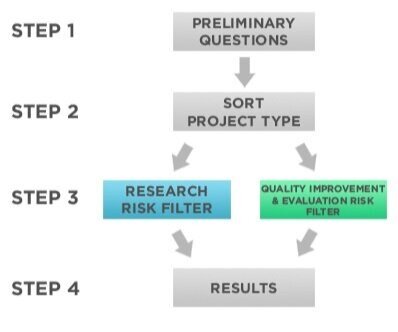

Most researchers have access to a research ethics board to review and approve (or not) their research projects. However, evaluators are often turned away by research ethics boards; after all, they are “research” ethics boards. Nonetheless, evaluation still involves people, information and potential risk to participants. In our home province, Alberta Innovates has created an ethical framework for evaluation and other innovation projects in Alberta. ARECCI stands for A pRoject Ethics Community Consensus Initiative. This collaborative initiative has developed two tools: a screening tool that helps identify whether your project is research, evaluation or quality improvement (QI), and an ethics guideline tool. Even if you are an evaluator outside of Alberta, these tools are useful for identifying risks to participants and helping you make decisions that protect people and their information.

Evaluator colleagues and community

When you’re not sure, ask! At Three Hive, we are lucky to work on a team where we can bounce ideas and questions off each other. In addition, my business partner, Shelby Corley, is what Alberta Innovates calls a Second Opinion Reviewer, which means she has received additional training in reviewing projects for ethical risk and ways to mitigate that risk. If you’re an independent consultant without your own in-house ethical angel, reach out to the evaluator community. As you know, evaluators love giving recommendations! Start a thread with the #EvalTwitter hashtag on Twitter, post a question on EvalCentral, or join some evaluation groups on LinkedIn and post your questions there. While standards are useful, you will get far more practical, real-world advice from your fellow evaluators.

If you’re still at a loss, sometimes you just have to revisit that gut feeling. Maybe my angel wasn’t saying “do the right thing” but “do what feels right” (it is so hard to hear her over that noisy devil sometimes!). As Ernest Hemingway said:

“So far, about morals, I know only that what is moral is what you feel good after and what is immoral is what you feel bad after.”
