This is an Eval Central archive copy; find the original at freshspectrum.com.

Post originally published as a collection of 13 evaluation cartoons on March 25, 2014. Updated with way more context on May 27, 2020.

When you search “What is Evaluation?” on Google, you get the kinds of responses you might expect.

- The Wikipedia page…if a topic is important enough it will have a Wikipedia page. And whatever you may think about Wikipedia, it’s one of Google’s favorite sites.

- Dictionary pages. We did ask for a definition, right?

- Evaluation association definitions.

- Large government and NGO definition pages.

They are somewhat formulaic. Usually the definition is tied directly to a prominent author or theorist, or it cites a recent article that in turn cites one. Then the organization expands upon that definition.

But why are you asking? Is a direct answer really all that important?

If it is, well then, here is a good one.

What is Evaluation?

Evaluation is the process of determining the merit, worth and value of things, and evaluations are the products of that process.

Now for the indirect answer, let’s dive deeper.

Evaluation can be hard to explain.

Back in 2014 the American Evaluation Association put together a task force with the purpose of defining evaluation.

The statement is meant to encourage dialogue, so based on comments and responses it will be revised periodically. Thus, your reactions and comments are encouraged (see comment section below).

The Task Force was comprised of both long-time evaluation professionals and AEA members newer to the profession. All have experience and expertise in communicating to others about evaluation. The task force included:

Michael Quinn Patton, Chair, Edith Asibey, Jara Dean-Coffey, Robin Kelley, Roger Miranda, Susan Parker, and Gwen Fariss Newman

The Canadian Evaluation Society took a similar route to find their own.

(Through a reflective process, the CES Board of Directors, supported by a consultation of members, has crafted and adopted the following as the CES definition of evaluation. Cheryl Poth, Mary Kay Lamarche, Alvin Yapp, Erin Sulla, and Cairine Chisamore also published Toward a Definition of Evaluation Within the Canadian Context: Who Knew This Would Be So Difficult? in the Canadian Journal of Program Evaluation, vol. 29, no. 3.)

For most of us, “What is evaluation?” is an open question. It evolves over time and adapts based on context. But I am not sure evaluators would have it any other way.

Research vs Evaluation

From this perspective, evaluation “is a contested term”, as “evaluators” use the term evaluation to describe an assessment, or investigation of a program whilst others simply understand evaluation as being synonymous with applied research.

I’m pretty sure the Wikipedia evaluation page editor is trying to call us out.

But honestly, there are a lot of converted researchers in evaluation. And there are a lot of “evaluators” who are really just doing research.

I was a converted researcher. I saw the similarities in the methods and thought that it was pretty much the same thing. But the difference isn’t in what you do.

The how, where, who, and why really matter in evaluation.

Evaluation is not…

Research: The purpose of research is to generate new knowledge, while evaluation is about making evaluative claims and judgments that can be used for decision making and action.

Figuring out the Type of Evaluation

Just like there is not one definition, there is not just one type of evaluation or one way to do an evaluation.

Evaluations can range from very simple service evaluations to complex evaluative research projects. Each service will require a different approach depending on the purpose of the evaluation; the evidence base, stage of development, and context of the service; and the resources and timescales for the evaluation.

Evaluations can focus on implementation and learning (formative evaluation), how a service works (process evaluation) and whether it has worked (outcome/summative evaluation) – or all of these aspects over the life cycle of a project.

A lot of organizations boil evaluation down to these three kinds of pursuits.

On BetterEvaluation, we use the word ‘evaluation’ in its broadest sense to refer to any systematic process to judge merit, worth or significance by combining evidence and values.

But there are all sorts of types of evaluation out there in the world.

Here are some thoughts on the topic by Emily Elsner, a current member of the freshspectrum Panel of Experts.

Michael Quinn Patton defines evaluation thus:

Evaluation involves making judgements about the merit, value, significance, credibility, and utility of whatever is being evaluated: for example, a program, a policy, a product, or the performance of a person or team.

Evaluation seems to raise two assumptions in people: first, that there is an easy ‘off-the-shelf’ solution, and second, that evaluation is going to be critical and negative. The angle of judgement, and (as Patton elaborates in his book) the association of judgement with values, is a crucial aspect that can be forgotten. Yes, evaluation can be critical, but it can also provide strategic guidance, support decision-making, and more – all positive, useful things for projects and organisations.

Linked to this, if evaluation is to be supportive of projects and organisations, then it needs to be tailored to the project/organisation; otherwise it is just measuring for the sake of it, as Muller, in his book ‘The Tyranny of Metrics’, reminds us:

There are things that can be measured. There are things that are worth measuring. But what can be measured is not always worth measuring; what gets measured may have no relationship to what we really want to know […] The things that get measured may draw effort away from the things we really care about. And measurement may provide us with distorted knowledge – knowledge that seems solid but is actually deceptive.

Emily Elsner is an impact and evaluation consultant based in Zurich, Switzerland. She has spent the last few years working in the migration-entrepreneurship-social enterprise space, and is now independent, balanced between the social and environmental spheres.

Overcoming the inherent tension between the evaluator and the program being evaluated.

Who gets to say what works and what does not work? What does it all really mean? It’s definitely not hard to move back and forth between evaluation and philosophy.

Evaluation and evaluative work should be in service of equity.

Focusing on the Right Outcomes

We are what we measure.

UNEG’s definition of evaluation further states that evaluation “should provide credible, useful evidence-based information that enables the timely incorporation of its findings, recommendations and lessons into the decision-making processes of the organizations and stakeholders.”

The United Nations Office on Drugs and Crime – What is Evaluation?

Connecting the Dots

Evaluative reasoning is the process of synthesizing the answers to lower- and mid-level questions into defensible judgements that directly answer the high-level questions. All evaluations require micro- and meso-level evaluative reasoning… not all require it at the macro level.

Jane Davidson’s UNICEF Methodological Brief on Evaluative Reasoning

Making Comparisons

In its simplest form, counterfactual impact evaluation (CIE) is a method of comparison which involves comparing the outcomes of interest of those having benefitted from a policy or programme (the “treated group”) with those of a group similar in all respects to the treatment group (the “comparison/control group”), the only difference being that the comparison/control group has not been exposed to the policy or programme.
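To make that comparison concrete, here is a minimal sketch in Python. It assumes the simplest case described above, where the comparison group is similar in all respects to the treated group, so the impact estimate reduces to the difference between the two groups’ mean outcomes. The function name and the outcome scores are hypothetical, made up purely for illustration.

```python
from statistics import mean


def counterfactual_impact(treated_outcomes, comparison_outcomes):
    """Hypothetical helper: estimate impact as a difference in mean outcomes.

    Assumes the comparison group matches the treated group in every respect
    except exposure to the programme, so its mean outcome stands in for what
    the treated group would have achieved without the programme.
    """
    if not treated_outcomes or not comparison_outcomes:
        raise ValueError("both groups need at least one outcome")
    return mean(treated_outcomes) - mean(comparison_outcomes)


# Invented outcome scores, for illustration only.
treated = [72, 80, 68, 75]     # people who benefitted from the programme
comparison = [65, 70, 62, 67]  # similar people who were not exposed to it

print(counterfactual_impact(treated, comparison))  # 7.75
```

The arithmetic is the easy part. The credibility of the estimate rests on how well the comparison group really matches the treated group; if the groups differed before the programme started, the difference in means mixes those pre-existing differences in with the programme’s effect.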

Systematic Assessment

Effective program evaluation is a systematic way to improve and account for public health actions by involving procedures that are useful, feasible, ethical, and accurate. Several key documents guide program evaluation at the CDC.

At various times, policymakers, funding organizations, planners, program managers, taxpayers, or program clientele need to distinguish worthwhile social programs from ineffective ones, or perhaps launch new programs or revise existing ones so that the programs may achieve better outcomes. Informing and guiding the relevant stakeholders in their deliberations and decisions about such matters is the work of program evaluation.

From Rossi, Lipsey, and Henry’s book Evaluation: A Systematic Approach

Evaluation is the systematic assessment of the operation and/or the outcomes of a program or policy, compared to a set of explicit or implicit standards, as a means of contributing to the improvement of the program or policy.

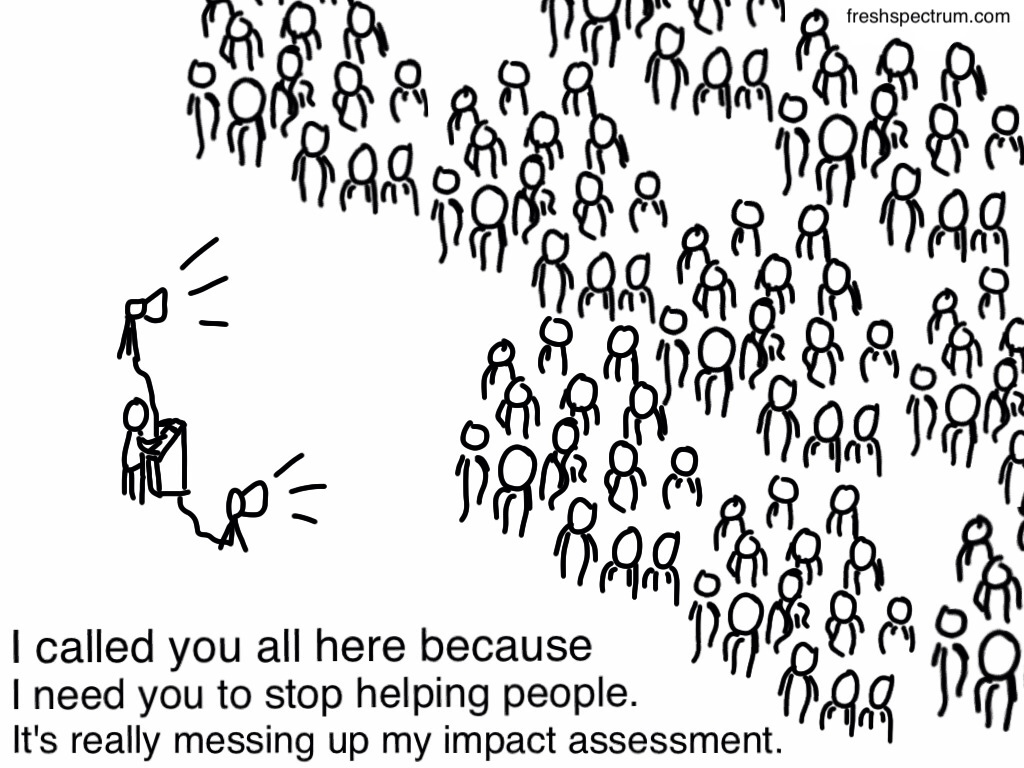

Impact Assessments

Impact analysis is a component of the policy or programming cycle in public management, where it can play two roles:

- Ex ante impact analysis. This is part of the needs analysis and planning activity of the policy cycle. It involves doing a prospective analysis of what the impact of an intervention might be, so as to inform policymaking – the policymaker’s equivalent of business planning.

- Ex post impact assessment. This is part of the evaluation and management activity of the policy cycle. Broadly, evaluation aims to understand to what extent and how a policy intervention corrects the problem it was intended to address. Impact assessment focuses on the effects of the intervention, whereas evaluation is likely to cover a wider range of issues such as the appropriateness of the intervention design, the cost and efficiency of the intervention, its unintended effects and how to use the experience from this intervention to improve the design of future interventions.

We need to accept the fact that what we are doing is measuring with the aim of reducing the uncertainty about the contribution made, not proving the contribution made.

John Mayne, Addressing Attribution Through Contribution Analysis: Using Performance Measures Sensibly

Want more evaluation cartoons?

If you were brought here because of the evaluation cartoons, you can find lots more by checking out the following post: 111 Evaluation Cartoons for Presentations and Blog Posts. The post will also provide you with information on licensing terms and use.