This is an Eval Central archive copy; find the original at evalacademy.com.

Designing a quality survey isn’t as easy as it seems. I’m sure we’ve all been asked to take surveys that frustrate us for one reason or another. Maybe the answer we want to choose isn’t available, the questions are confusing and poorly worded, or maybe it’s just too long and tedious.

Understanding what can lead to respondent frustration and mitigating that risk is one way to ensure your survey will get answered! One of the common sources of frustration is when the response you want to give isn’t available to you. This is where options like “Not Applicable”, “Don’t Know”, or a neutral response come into play. Let’s delve into when and how to use these options effectively.

Not Applicable

The “Not Applicable” option (often abbreviated N/A or NA) is useful when a question might not pertain to all respondents. Including a “Not Applicable” option can prevent respondents from abandoning the survey or providing an inaccurate response. A “Not Applicable” response is also easier to interpret than a blank when you are analyzing the data. Blanks are difficult to interpret: were the response options not adequate? Did the respondent miss the question? Was the wording confusing? N/A gives you clarity when you are interpreting results.

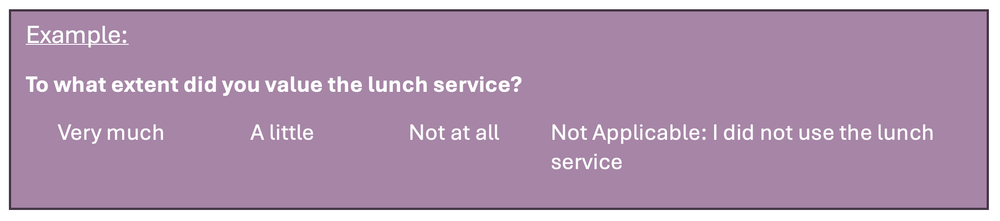

To add even more clarity, you can add a description to the N/A option to ensure respondents understand what it means to select it.

You could also orient respondents in your instructions, e.g., “If you did not use the service listed, please select ‘Not Applicable’.”

If your question uses a Likert scale, the N/A option typically sits outside the scale. That is, if your scale runs from 1 to 5, N/A would be a separate sixth option rather than a point on the scale.

One way to avoid needing a “Not Applicable” response is to use skip logic in your survey. First, ask your respondents IF something applies (e.g., “Do you do this?”, “Do you own this?”, “Do you have experience with this?”). IF yes, ask the follow-up questions about feelings or experience; IF no, skip to the next question. This is easier to do in digital surveys than on paper, where skip instructions can confuse respondents.
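For digital surveys, that IF yes / IF no routing can be sketched in a few lines of Python. This is only an illustration: the question IDs, the wording, and the `next_question` helper are all made up for the example, and most survey platforms offer skip logic as a built-in setting, so you would rarely write it by hand.

```python
from typing import Optional

# Hypothetical question IDs and wording, for illustration only.
QUESTIONS = {
    "q1_used_service": "Did you use the service in the past year? (yes/no)",
    "q2_satisfaction": "How satisfied were you with the service? (1-5)",
    "q3_hear_about_us": "How did you hear about us?",
}


def next_question(current_id: str, answer: str) -> Optional[str]:
    """Return the ID of the next question to show, or None at the end."""
    if current_id == "q1_used_service":
        # Screening question: only people who used the service
        # see the satisfaction follow-up; everyone else skips it.
        if answer.strip().lower() == "yes":
            return "q2_satisfaction"
        return "q3_hear_about_us"
    if current_id == "q2_satisfaction":
        return "q3_hear_about_us"
    # q3 is the last question in this tiny example.
    return None
```

Because respondents who answer “no” never see the satisfaction question, that question no longer needs a “Not Applicable” option.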

Another consideration when using “Not Applicable” is data entry or data coding. You likely want to be able to differentiate “Not Applicable” from blank responses and even neutral responses.
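Here is a minimal sketch of one way to keep those three categories apart when coding responses. The codebook is an assumption made for the example (1 to 5 for the scale, 3 as the neutral midpoint, the string "NA" for “Not Applicable”, and `None` for a blank), and the responses are made up.

```python
from statistics import mean

# Assumed codebook: 1-5 is the Likert scale and 3 is the neutral midpoint.
NOT_APPLICABLE = "NA"  # respondent chose "Not Applicable"
MISSING = None         # respondent left the question blank

# Made-up responses to a single question.
responses = [5, 4, 3, NOT_APPLICABLE, MISSING, 2, NOT_APPLICABLE, 3]


def summarize(values):
    """Count each response category separately and average only scale scores."""
    scale = [v for v in values if isinstance(v, int)]
    return {
        "mean_score": mean(scale),  # N/A and blanks do not affect the mean
        "n_scored": len(scale),
        "n_neutral": scale.count(3),
        "n_not_applicable": sum(1 for v in values if v == NOT_APPLICABLE),
        "n_blank": sum(1 for v in values if v is MISSING),
    }


summary = summarize(responses)
```

The point of the design is that N/A is never stored as a number. If it were coded as 6 (or 0), it could slip into an average and quietly distort the result.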

Don’t Know/Unsure

The “Don’t Know” option is beneficial when a question requires specific knowledge. Including a “Don’t Know” option can prevent guesswork and maintain the integrity of your data.

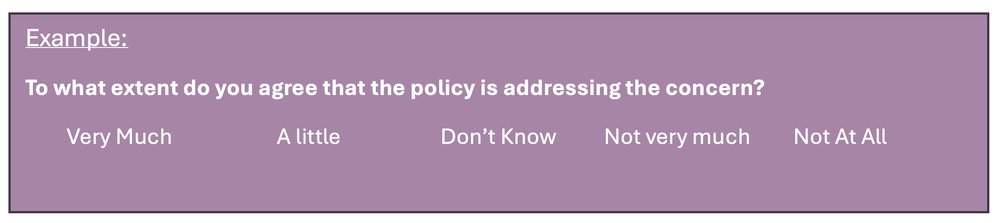

“Don’t Know” can also be used when asking an opinion-based question (e.g., how much do you agree…). In this case, “Don’t Know” may be your anchor point in the middle of your other response options. This allows your respondents to report that they don’t have a clear stance. More on this in the “Neutral Response” section below.

“Don’t Know” can also be used when asking about a future prediction, e.g., if you want to know if your respondents plan to use a service in the next six months.

I think most often “Don’t Know” and “Unsure” can be used interchangeably, but sometimes minor differences matter. I propose that “Don’t Know” is more about knowledge and “Unsure” is more about feelings.

Don’t Recall

Similar to “Don’t Know” and “Unsure”, “Don’t Recall” is a useful option when you are asking about a memory, past experience, or behaviour. Allowing respondents to select “Don’t Recall” reduces the risk of them reporting a false memory or a best guess.

Neutral Response

A neutral response, often labelled as “Neither Agree nor Disagree”, is a staple of the Likert scale. It allows respondents to choose a middle ground if they don’t have a strong opinion on a statement. However, there’s ongoing debate about the use of a neutral option. Some argue that it can lead to fence-sitting, while others believe it provides a valid choice for respondents without a clear opinion. Consider your survey goals and your audience before deciding to include a neutral option.

If you choose to omit a neutral option in a two-sided response list, you are doing what’s called “forced choice”, and your results may be more likely to give you insights that align with the purpose of the survey. Sometimes that middle point gives respondents a way to answer quickly without thinking deeply about their selection, which means the data you collect may not be accurate.

Interpretation of the middle point can be problematic: does it reflect true neutrality or just indifference? Did the respondent not understand the question? Forced choice can offer more declarative data and reporting, but it can also turn off respondents who may genuinely be in that middle ground, and lead to more questions left blank.

Have you ever analyzed survey data and found that one or two questions have an abnormally high rate of neutral responses? This may actually be an indication that the wording is off, or you’re using a double negative that confuses people, so they select neutral in hopes of not being ‘wrong’.
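If your responses are already coded numerically, a quick check like the sketch below can surface those questions. Everything in it is assumed for illustration: the question IDs, the made-up answers, a 1 to 5 scale with 3 as the neutral point, and especially the 50% threshold, which is arbitrary and should be set against what is typical in your own survey.

```python
NEUTRAL = 3  # assumed midpoint of a 1-5 agreement scale

# Made-up responses keyed by hypothetical question IDs.
answers = {
    "q1": [1, 2, 4, 5, 4, 3, 5, 2],
    "q2": [3, 3, 3, 4, 3, 3, 2, 3],
    "q3": [5, 4, 4, 3, 2, 1, 4, 5],
}


def neutral_rate(values):
    """Share of responses that sit on the neutral midpoint."""
    return values.count(NEUTRAL) / len(values)


def flag_high_neutral(data, threshold=0.5):
    """Return the question IDs whose neutral rate exceeds the threshold."""
    return [
        question
        for question, values in data.items()
        if values and neutral_rate(values) > threshold
    ]


flagged = flag_high_neutral(answers)
print(flagged)  # ['q2']
```

A flagged question isn’t necessarily a bad one, but it is a good candidate for a second look at the wording.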

Other, Please Specify

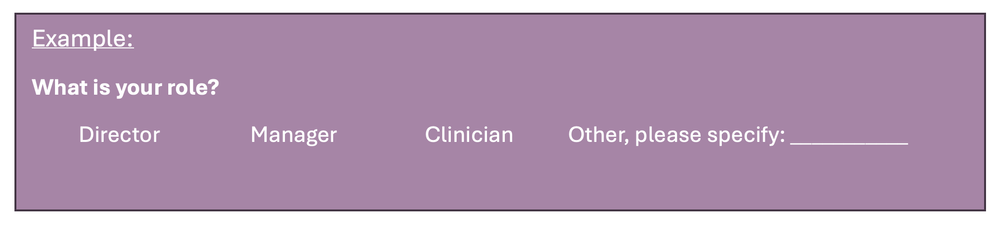

“Other, please specify” is a helpful option when you want to avoid short answer questions but know that your multiple choice or drop-down lists may not be exhaustive. If you know the top choices that are likely to be selected but expect some outliers, “Other, please specify” limits your need to analyze qualitative data and still allows your respondents to give a true answer. I often use this for job titles or roles.

Summary

Including these middle-ground or opt-out response choices can help maintain data integrity and improve response rates. However, they should be used thoughtfully and sparingly, as overuse can result in non-committal responses and make it harder to draw insights from your findings. That said, they are absolutely necessary for some questions: if they’re missing, the result can be frustration and survey abandonment, or worse, false responses. Always pilot test your survey to ensure that these options enhance, not detract from, your data collection.

Have you heard of “satisficing”? It sounds like a made-up word but it’s not. Satisficing is when respondents give a “good enough” answer instead of cognitively taxing themselves to come up with a truer response. It makes surveys easier to complete because respondents don’t have to think deeply about each question, but it’s terrible for interpretation and findings!

Whether or not you choose to include these response options may involve considering your sample size and your target audience. If your sample is small and you want to avoid fence-sitting, forcing choices may be appropriate. If your target sample may already have some barriers or struggles with responding to a survey, allowing for some opt-out responses may also be appropriate.