This is an Eval Central archive copy; find the original at drbethsnow.com.

Preconference Workshop: Transformative Mixed Methods: Supporting Equity & Justice by Donna Mertens

A key reason that I decided to take this pre-conference workshop was because I wanted to learn from Donna Mertens. I really like her writing and wanted to have a chance to learn from her in person. She did not disappoint! While I didn’t find there was much about mixed methods, per se, there was a lot about transformation, equity, and justice. Here are some things I learned/re-learned:

- In France there is a law against the government collecting data on race. It dates back to WWII, when government data on Jewish people facilitated the ability to send Jewish people to concentration camps.

- Ethics is the start of every decision we make in evaluation.

- If you are not challenging oppressive structures, you are complicit in the status quo.

- You can challenge your client/commissioner – e.g., if they ask for a survey for a summative evaluation, you can ask them if that’s really going to be transformative.

- mixed methods – what is the synergy between the quant and the qual? what do you gain by bringing quant and qual in dialogue with each other?

- transformative paradigm

- axiology (i.e., nature of ethics & values) – culturally responsive, promotes social/environmental/economic justice and human rights, addresses inequities, reciprocity (what do you leave the community so they can sustain the change when the evaluator leaves?), resilience, interconnectedness (living & non-living), relationships

- ontology (i.e., nature of reality) – reality is multi-faceted, historically situated, consequences of privilege

- epistemology (i.e., the nature of knowledge & relationship between knower and that which would be known) – interactive, trust, coalition building

- methodology (i.e., nature of systematic inquiry) – transformative, dialogic, culturally responsive, mixed methods, policy change as part of methodology

- transformative mixed methods design:

- Build relationships

- communities often have historical experiences of research/evaluation that were extractive and oppressive; researchers need to earn trust

- identify existing community action groups and understand the history of their efforts; identify formal & informal leaders; identify community needs/gaps/strengths/assets

- Contextual analysis

- cultural, historical, political, environmental, legislative, power mapping

- policy analysis (what’s written and unwritten; what’s written but not enacted)

- Pilot interventions

- collect data, make mid-course corrections

- Implement intervention

- collect data for process evaluation

- collect data on unintended/unanticipated outcomes

- Determine effectiveness

- outcome evaluation

- Use findings for transformative purposes

- include in the contract the importance of working with the community – from relationship building at the start all the way through to sharing the findings at the end

- if the community is involved throughout the evaluation, they will already know the findings and will not need to wait for the final report to find out (also, reporting findings along the way will make sure you are reporting data back to community in a reasonable time)

- Build relationships

- You can say that you’ll work with an expected goal of reducing inequities and increasing justice and that you’ll work in respectful ways; you can’t guarantee that you’ll make things better and can’t guarantee you won’t cause harm, because we don’t know what will happen

- http://transformativeresearchandevaluation.com/

Opening Plenary: Re(Shaping) Evaluation: Decolonization, New Actors, & Digital Data. Edgar Villanueva interviewed by Nicky Bowman.

Villanueva wrote the book Decolonizing Wealth. I will admit that I have this book in my big pile of books to read, but hadn’t got around to reading it! After hearing this keynote, I’m even more excited to read it. Here are some things he said during the keynote that resonated with me:

- we learned the names of the colonizers’ ships (the Nina, the Pinta, the Santa Maria, the Mayflower), but not the names of the Indigenous lands and people

- colonization is like a virus that wipes out anything that is not like the dominant culture

- the US is working on Truth & Reconciliation legislation re: Indian Boarding Schools

- none of us has ever lived in a world that wasn’t actively being colonized. It can be violent and it can be subtle.

- we can’t collectively heal without acknowledging how we got here

- we need to change 4 things:

- people: more diversity of perspectives in leadership

- resources: who has them, who makes decisions about them, and who has the microphone

- stories: need to shift away from the deficit mindset, see the strengths

- rules: spoken & unspoken policies, need more equitable policies, but also need to become aware of and change the unspoken rules that limit our work

Concurrent Session: Walking the talk: Bringing Ontological Thinking into Evaluation Practice by Jennifer Billman and Eric Einspruch

Journal article: Framing Evaluation in Reality: An Introduction to Ontologically Integrative Evaluation

Thursday Plenary: Co-creation of Strategic Evaluations to Shift Power. Moderator: Ayesha Boyce. Speakers: Elizabeth Taylor-Schiro/Biidabinikwe, Gabriela Garcia, Melanie Kawano-Chiu, and Subarna Mathes

Here are some things that the panelists said that resonated with me:

- Ayesha Boyce:

- equity is context-specific

- Gabriela Garcia:

- equity is not enough. The next step is collective liberation

- At Beyond, they use a culturally-responsive evaluation framework, start all evaluations in a visioning session, ensure the evaluation is grounded in community values

- Elizabeth Taylor-Schiro/Biidabinikwe:

- communities striving for collective liberation don’t have power and that’s the problem. Power is needed to draw on their strengths, move toward sustainability and self-determination

- it should be the community leading the evaluation, supported by evaluators, rather than ‘co-creating’ the evaluation

- Melanie Kawano-Chiu:

- whoever funds the evaluation gets to make the most decisions – that’s a bias we hold

- ableism = there is a “norm” and if you fall outside of it, you aren’t good enough

- “Nothing about us without us” comes from the South African disability community

- Disability Rights Advocacy Fund formed after the UN Convention on the Rights of Persons with Disabilities

- Subarna Mathes:

- rigour = degree of confidence that the program has led to an outcome [different from rigour in the post-positivist sense]

- we need to push against the view of rigour that is narrowly defined, that prioritizes a worldview of “one reality” or “objectivity”

Concurrent session: Interactive tool to promote responsible use and understanding of culturally responsive and equity-focused evaluation by Blanca Guillen-Woods, Felisa Gonzales, Katrina Bledsoe, Kantahyanee Murray

- https://slp4i.com/the-eval-matrix/ is an online tool that helps you choose among various equity-focused/culturally-responsive evaluation approaches

- 7 key principles, 3 focus areas (individual, interpersonal, structural levels)

- This tool is really cool and I’m definitely going to share it with my students, as they often ask how to choose an approach (or approaches) when designing an evaluation

Concurrent Session: Design Sprint: How Researchers Can Share Power with Communities Involved in Evaluations by Gloriela Iguina-Colón and Brit Henderson

These presenters took us through a workshop on power sharing. Here are some things that they talked about that resonated with me:

- power is often thought of in the sense of authority, control – power over other people or things

- MLK described power this way: “Power properly understood is nothing but the ability to achieve purpose. It is the strength required to bring about social, political, and economic change.”

- can have power with (collaborate with others to find common ground), power to (believe in people’s ability to shape their own lives), and power within [which I didn’t catch the meaning of in my notes so I just Googled it and found this: “the sense of confidence, dignity and self-esteem that comes from gaining awareness of one’s situation and realizing the possibility of doing something about it.”]

- power levers:

- resources

- access

- opportunities

- power sharing = recognizing the power levers that you have and actively choosing to leverage these to build collective strength

- positionality – “how our social identities and experiences influence the choices we make in the research process and how those factors shape the way others see us and give us power and/or insight in a specific research context.”

- consider experiences (interactions with the topic; lived experience of the topic), social identities (it’s context-dependent which are valued or not valued), perspectives (about the topic; understanding systems of oppression); identifying these can provide helpful insights (e.g., when you share an identity with participants) and biases (e.g., when you don’t share an identity (or an intersection of identities) and have assumptions/biases)

- in addition to individual positionality, think about team positionality

- reflexivity – “an attitude of attending systematically to the context of knowledge construction, especially to the effect of the researcher, at every step of the research practice.”

- examination, attitude, process related to the topic; not just about identifying these things, but also what insights this will give me and where I might have knowledge gaps

- opportunity spaces: “points in the [evaluation] process during which you can apply power levers to facilitate meaningful participation among and share decision-making power with […]”

- each of the steps of the evaluation process is an opportunity for meaningful participation: evaluation design, data collection, data analysis, interpretation of results, and dissemination

- facilitation – provide enough structure so everyone can be heard; be mindful of different views of evaluation

- when considering the key people/groups in an evaluation, ask:

- who has the most power/privilege in this context?

- who will be most impacted by the evaluation?

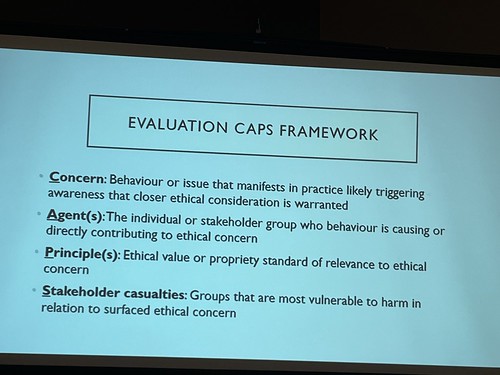

Concurrent Session: Ethics for Evaluation: Can We Go Beyond Doing No Harm to Tackle Bad and Do Good? by Penny Hawkins, Donna Mertens, and Tessie Catsambas

Concurrent Session: Equitable Evaluation Discussion Guide by Maggie Jones, Natasha Arora, and Elena Kuo

- Centre for Community Health & Evaluation at Kaiser Permanente (Seattle) [seemed quite similar to CHEOS]

- equity-focused conversation about the evaluation design with someone from their organization who is not part of the project to get a different perspective

- they created a guide that includes pre-work before the meeting, then a meeting where you do a consultation with reviewers

- helped them to think from multiple angles (not just “what’s in the RFP?”)

- helped them to discuss assumptions and implications

- articulate what they can and cannot do to address equity

- might not be able to do something in the current evaluation, but if you don’t identify ideas, won’t ever do them – so may be something to put in the next proposal if it’s too late to do it in this proposal

- they tell funders that the EDI review is part of their process (i.e., we will develop the plan, put it through the EDI review, and may come back to the funder with new ideas)

- ultimately they would like to have a systematic follow-up process where people document what they do (trying to document changes that happened due to the EDI review process) to build evidence on whether this process makes a difference

Concurrent Session: Identifying Gaps in the Research on Professionalizing Evaluation: What Do We Need? by Amanda Sutter, Esther Nolton, Rebecca Teasdale, Rachael Kenney, Dana Wanzer

Concurrent Session: Creative Practices for Evaluators by Chantal Hoff & Susan Putnins

- reminded me a lot of Jennica & May from ANDImplementation

Concurrent Session: Who Are We? Studies on Evaluators’ Values, Ethics and Ontologies by John M. LaVelle, Michael Morris, Clayton Stephenson, Scott I Donaldson, Justin Hacket, Paidamoyo Chikate, Jennifer Billman

- VOPEs have ethics, standards, and competencies, but we as evaluators interpret them through our own lenses

- values = a set of goals and motivations that serve as a guiding group of principles, affect decisions/attitudes/behaviours, come from many sources, influence our practice

Concurrent Session: Mapping Distinctions in the Implementation of Learning Health System (LHS) by Anna Perry & Doug Easterling

- the LHS concept comes from the National Academy of Medicine, but it is too high level and ambiguous to guide the actual work of becoming an LHS

- in the US, electronic health records were adopted in the early 2000s, and the Affordable Care Act required the use of data to inform the health system

- Academic Health Centres were not early adopters of LHS because they were focused on research to build knowledge vs. continuous improvement type stuff

- hypothesis is that LHS is supposed to improve patient care, patient outcomes, and staff satisfaction (since they are more engaged)

Concurrent Session: Who are We? Studies of Evaluator Beliefs, Identity, and Ethics by Rachel Kenney, Bianca Montrosse-Moorhead, Amanda Sutter, Christina Peterson, Rachel Ladd, Betty Onyura, Abigail Fisher, Qian Wu, Shrutikaa Rajkumar, Sarick Chapagain, Judith Nassuna, Latika Nirula

- Ladd & Peterson discussed consensual qualitative analysis

- Tin Vo presented on behalf of Betty Onyura, who was not able to attend. Talked about how the commodification of evaluation work is in tension with trying to support equity and social justice

- an audience member suggested the word “constituent” instead of “stakeholder” [as a lot of us are trying to find a word to replace “stakeholder”]

Concurrent Session: Playing with Uncertainty: Leaning into Improv for Effective Evaluation by Daniel Tsin, Libby Smith, and Tiffany Tovey

- improv as reflection-in-action

- improv as a mindset – every idea matters

- thinking on your feet, using a different part of your brain, building on ideas, chance to be brave – can all be useful in evaluation

- activity: Zip Zap Zop – toss a ball and say “zip,” “zap,” and “zop” in that order, and when someone drops the ball, we all cheer “woop!”

- a chance to experience failure and turn it into a celebration

- shared experience of a group

- a plan for when we mess up

- have to pay attention the whole time – not planning what to do, but being present, acknowledging what is being said/done

- facilitator is not in control

- activity: Yes, and…

- “and” is generative, while “but” feels more like you are shutting someone down

- you will notice “but” in everyday life when you could have used an “and”

- sometimes you want to be generative and sometimes you want to prioritize (e.g., you don’t want to keep “and”ing when building a program ToC and end up trying to do everything).

- Adrienne Maree Brown’s Emergent Strategy

Concurrent Session: What Should I Do? Examining Uncertainty, Decision Points, and Pushback in Evaluation Practice by Rebecca Teasdale, Tiffany Tovey, Grettel Arias Orozco, Julianne Zemaitis, Onyinyechukwu Onwuka, Cherie Avent, Christina Peterson, Allison Ricket, Mandy White, Kelli Schoen, Daniel Kloepfer, Natalie Wilson

- evaluations require interpersonal skills, but these skills aren’t taught in evaluation courses or in evaluation texts

- it’s a human tendency to be defensive, and as a conversation proceeds, defensiveness can increase because what a person hears can become a distortion of what the message was

- Kahlke, 2014 – Generic Qualitative Approaches: Pitfalls and Benefits of Methodological Mixology

- Braun & Clarke, 2022

- evaluators are always dealing with uncertainty

- different people have different levels of tolerance for uncertainty (and an evaluator’s tolerance might be different from that of the people they work with)

- aspects of uncertainty:

- probability – quantitative representation of the amount of uncertainty

- ambiguity – different ways to interpret findings

- vagueness – how detailed the language is

- talk with the client before you start – what are the stakes of the decision? What is their tolerance for uncertainty (i.e., how certain do they need to be)? This can inform choice of methods, etc.

- uncertainty can be leveraged to drive transformational change by creating dialogue about the unknown and asking more interesting questions about the unknown (e.g., if data is not available, ask why there is no data)

My post-conference “to do” list

- read Decolonizing Wealth

- read all the various articles that I took note of

- order System Evaluation Theory: A Blueprint for Practitioners Evaluating Complex Interventions Operating and Functioning as Systems by Ralph Renger (I chatted with Ralph at the book fair, but there weren’t any books for sale there – it was just a chance to talk to authors)